Should PC gaming shift its focus to APUs and laptops? We should stop thinking of GPUs as gaming hardware. Change my mind.

Given the supply constraints, another user hit the nail on the head:

3 hours ago, whm1974 said: "Most Gamers will simply use whatever dGPU they already have and upgrade when the Market becomes Stable."

Given the current supply constraints, that is all anyone should plan on. Look at the stock levels at, say, Micro Center right now: TONS of Ryzen CPUs with no integrated graphics, and tons of GT-level (not GTX) graphics cards.

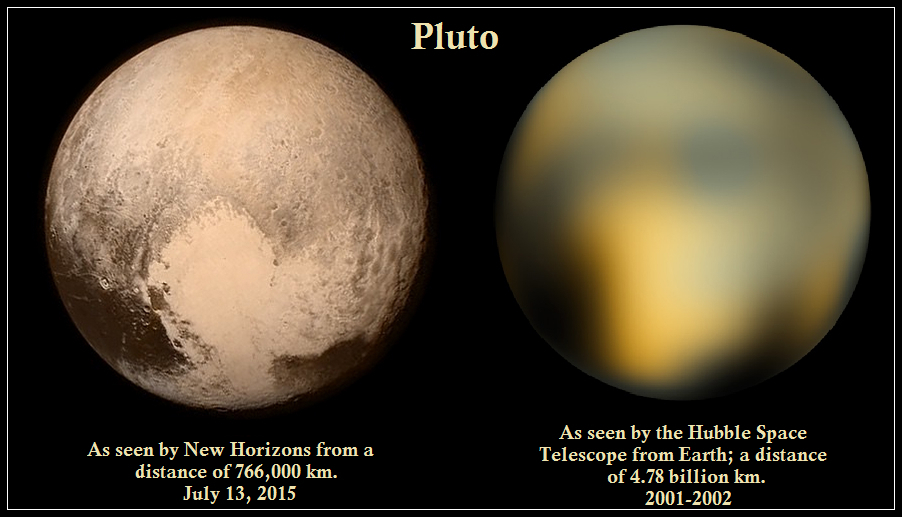

@tikker Here is an observation that demonstrates how you are wrong. You mentioned that, with enough computing power, more information can be extracted from a Hubble image. That is similar to this image of Pluto, taken with Hubble before the New Horizons spacecraft visited it.

The image on the right is the kind of super-resolution you keep going on about. Compare it to an image taken up close, astronomically speaking, where the camera's angular resolution reveals features the Hubble image barely hints at. Sitting in front of your monitor, you are NOT seeing anything like what is on the right.

_____Some general comments and my final realization ________

The idea that you need 4K AND 120 Hz AND ray-traced global illumination to game is pure marketing hogwash. All of those features are really only useful for AI, high-fidelity physics simulations, research-grade simulations, and other such tasks. Most games barely scratch the surface of them, and don't do so very well.

For games, what matters more is a smooth, real-time experience. Turn down the resolution, sit farther from the screen, and you get that.
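As a rough illustration of why that works, here is a minimal Python sketch. The function names, the roughly 21-inch screen width, and the viewing distances are illustrative assumptions, not measurements. It counts the raw pixels the GPU has to render each second, and estimates the average pixels per degree of your field of view, which goes up as you sit farther back.

```python
import math


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixel count the GPU has to render each second (ignores per-pixel cost)."""
    return width * height * fps


def pixels_per_degree(h_pixels: int, screen_width_in: float, distance_in: float) -> float:
    """Average horizontal pixels per degree of visual angle across the screen."""
    # Horizontal angle the whole screen subtends at the viewer's eye, in degrees.
    fov_deg = 2 * math.degrees(math.atan((screen_width_in / 2) / distance_in))
    return h_pixels / fov_deg


if __name__ == "__main__":
    # Hypothetical setups for comparison.
    ratio = pixels_per_second(3840, 2160, 120) / pixels_per_second(1920, 1080, 60)
    print(f"4K@120 vs 1080p@60 pixel work: {ratio:.0f}x")

    # Assumed ~21-inch-wide screen (roughly a 24-inch 16:9 monitor).
    for distance in (24, 48):
        ppd = pixels_per_degree(1920, 21.0, distance)
        print(f"1080p at {distance} in: {ppd:.1f} pixels per degree")
```

By this simple count, 4K at 120 fps is eight times the pixel work of 1080p at 60 fps, and moving back from the screen packs more of those 1080p pixels into each degree of your vision. It ignores shading cost per pixel, so treat it as a back-of-the-envelope comparison only.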

The new reality right now, for most people, is that if you want to game on PC, these are the real options:

- Just buy a laptop with a good APU (NEVER to be confused with an Intel iGPU, except maybe their newest graphics).

- Just buy a prebuilt. See the video from ETA Prime about gaming on a Ryzen 4700G-based prebuilt, and notice it has an expansion slot, so you could add a GPU if you can get one: Cheapest Ryzen 4700G Prebuilt PC - Outstanding Performance From This APU! (YouTube)

- Just buy what parts are available, BUT with an eye toward upgrading to whatever comes next if you can. This would be much more viable if we weren't at EOL for socket AM4.

- Given what is actually in stock in stores, that means gaming on a GT 710 or GT 1030.

Then, if you get lucky, you can upgrade. You can live in hopes and dreams, or you can live in reality. Just keep it real with yourself: wanting an RTX 30 series card is like trying to date a movie star or win the lottery.

As for the idea that I am not into building computers: I built one at the start of the pandemic.

See here: The Stimulus Payment Build, Putting It Together (Build Logs, Linus Tech Tips).

I have been using computers since the 1980s, and gaming on them since before the movie WarGames was new. If you are a real OG PC gamer, you know where I'm coming from. The idea that a GPU is a hard requirement is a relatively recent one that held true for about 15 years, but by necessity it just can't be true anymore.

