So apparently, the Intel/Radeon marriage is actually happening.

> The new product, which will be part of our 8th Gen Intel Core family, brings together our high-performing Intel Core H-series processor, second generation High Bandwidth Memory (HBM2) and a custom-to-Intel third-party discrete graphics chip from AMD’s Radeon Technologies Group* – all in a single processor package.
>
> It’s a prime example of hardware and software innovations intersecting to create something amazing that fills a unique market gap. Helping to deliver on our vision for this new class of product, we worked with the team at AMD’s Radeon Technologies Group. In close collaboration, we designed a new semi-custom graphics chip, which means this is also a great example of how we can compete and work together, ultimately delivering innovation that is good for consumers.

So this is the semi-custom design AMD talked about a while back, and now it has actually happened. Here's Intel's video:

So yeah. We now have an Intel CPU with an AMD RTG GPU in the 35-55W TDP range.

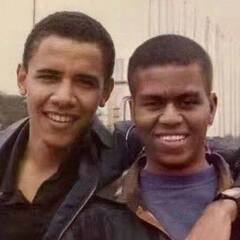

Here's how they did that:

That's a single HBM2 stack, so we're likely limited to 4GB of video RAM. Details of the AMD GPU are still unknown at this point. It's likely to be Vega-based, but things such as SP count and clock speeds are not known yet.

