Rumor: AMD to go LGA with AM5, PCIe Gen 5 limited to only EPYC CPUs for Zen 4 (Update: More leaks)

Solved by Random_Person1234,

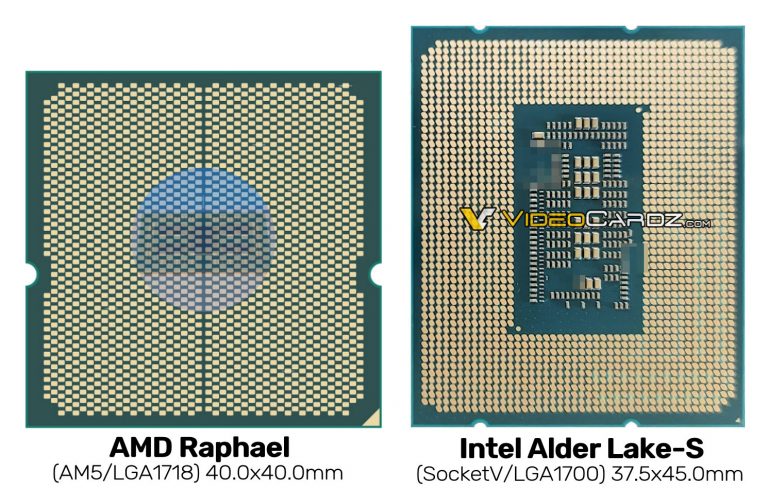

Update: Executable Fix has since leaked more details. Zen 4 Raphael (the first consumer Zen 4 chip) will reportedly support DDR5, as expected, but unlike Intel's Alder Lake it will not also support DDR4. Raphael is said to have 28 PCIe 4.0 lanes, up from Zen 3's 24. The chips will have a 120W TDP, with a special 170W variant also possible. Executable Fix also leaked a picture of the LGA 1718 pads on the CPU.

Here it is compared to LGA 1700 (Alder Lake socket):
