Posts

5,941

About MageTank

Birthday

October 27

Contact Methods

Battle.net

MageTank#11790

Profile Information

Gender

Male

Location

United States

Interests

Gaming, Computer Hardware

Occupation

Slim Jim Enthusiast

Member title

Fully Stable

System

CPU

Core i7 8700K 5.4 GHz Cinebench Stable (best kind of stable)

Motherboard

ASRock Z370 Fatality K6

RAM

4x8GB Patriot Viper Steel DDR4 4400 C19 (Clocked at 4000 C15-15-15-30-2, 36ns latency)

GPU

EVGA RTX 2080 Ti Black Edition XC

Case

Thermaltake Core P3

Storage

Intel 2TB 660P M.2 NVMe SSD

PSU

EVGA 850W Supernova G2

Display(s)

LG OLED B9 55" 4K 120Hz G-Sync TV

Cooling

Decent Sized Custom Loop

Keyboard

Logitech G Pro TKL

Mouse

Logitech G703

Sound

Sennheiser Game One

Operating System

Windows 10 Pro

Laptop

PowerSpec 1510 (Clevo P650HS-G) w/ 120Hz G-Sync panel

Recent Profile Visitors

14,751 profile views

MageTank's Achievements

-

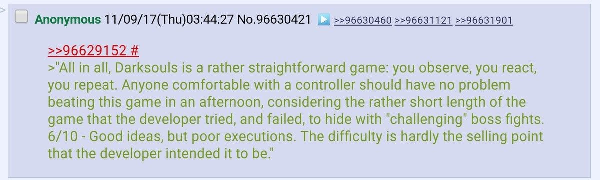

Gamers Nexus alleges LMG has insufficient ethics and integrity

MageTank replied to osgalaxy's topic in General Discussion

Yeah, I think his testing methodology itself was flawless; I just find the scope weird. If you want to point out throttling in Blender, that's perfectly fine, but I'd also like to see some actual game performance. Then the conclusion could be "Buy this if you only want to game, but avoid it if you need to do heavy CPU rendering on the side". Most of the people I would refer to review channels online are just gamers, not people educated enough to draw their own conclusions. While I personally water-cool all my stuff, I don't see pairing a 5600X with a stock cooler as a bad thing. Steve mentioned in the video that it is a 65 W processor, and the AMD Wraith Stealth cooler (assuming that is what it was, based on the pictures/video) is rated for 65 W. The fact that it maintained a 300 MHz boost on that stock cooler under Blender is impressive by itself, lol. I assume Steve would tell people to refer to the 3060 review if they want gaming performance, but if the throttling is such a concern, I'd like to see that explored in the video itself, especially since the title of the video also refers to the system as a gaming PC. It would also have been great to see whether the extra $20 he spent on the replacement cooler improved FPS, and if so, by how much. That would help inform buyers that paying X percent more results in a Y percent performance boost.

Gamers Nexus alleges LMG has insufficient ethics and integrity

MageTank replied to osgalaxy's topic in General Discussion

Yeah, I just watched a video from GN last week about an $800 prebuilt PC from Micro Center. Dude went in on it for its bad design and thermal throttling under Blender, and concluded that it was a bad gaming PC. Problem is, I saw zero gaming benchmarks in a video about a gaming PC. I consider him knowledgeable, and his methodology was flawless in that video, but he seems to have issues with the scope of his videos. That makes it hard for me to recommend them as a "review channel" when people won't get relevant review information. This pales in comparison to the scale of the issues he pointed out about LMG, but it's still something he would have to acknowledge to avoid being hypocritical in his push for ethical testing and scope.

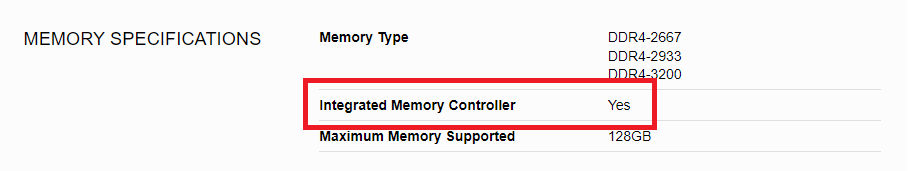

Comprehensive Memory Overclocking Guide

MageTank replied to MageTank's topic in CPUs, Motherboards, and Memory

It does, but to a lesser extent. When I used Skylake, I didn't exceed 1.15 V on either rail, and once I got my OC dialed in, both were stable at 950 mV. VCCSA tends to scale a little better, though, so having it higher than VCCIO wouldn't be uncommon; just make sure you avoid pushing it too high. Most of the people recommending 1.3-1.35 V on VCCSA back then had no idea about IMC longevity on Skylake; they were just guessing, lol. As for the BIOS, newest isn't always best, especially when it comes to memory stability. If your board supports rolling back, it might be worth trying an earlier BIOS version. I don't know if people discuss BIOS testing for memory OC on LTT, but the OCN forums might have older threads where this was investigated in the Intel CPUs sub-forum.

Comprehensive Memory Overclocking Guide

MageTank replied to MageTank's topic in CPUs, Motherboards, and Memory

VCCIO isn't something that provides more stability the higher it goes. It's actually very sensitive to "voltage holes", where certain values (both low and high) are simply unstable. For example, I've had scenarios where 950 mV was stable but 1000 mV wasn't: a 50 mV difference resulted in complete instability, and 1050 mV was just as stable as 950 mV. I do not like the modern trend of throwing more VCCIO/VCCSA at things for stability, but I am seeing it far more often with memory overclockers these days. My testing just doesn't show good scaling. The same can be said of VDDP on AMD. If you pay attention to AM5 and the latest 1.0.0.7B AGESA firmware, they actually lowered VDDP from 1.15 V down to 0.9 V on most boards, because vendors were throwing way too much voltage at XMP kits, resulting in instability and longer boot times. People attributed the faster boots to MCR, but it was actually the VDDP voltage. For your issue in particular, it might be worth trying a different BIOS. The noise you are hearing can be caused by impedance, which would explain why VCCIO affects it. I would also recommend experimenting with lower VCCIO voltages; I promise you can find stability just as easily with less voltage.

I normally use a heating element or heating iron and sit the processor on top of it, but those tools are in my home lab, not my work lab, lol. Ryzen in general is a little trickier to delid than Intel, and it just isn't worth it thermally speaking. You have to use a thin razor to break the adhesive without scratching the substrate, make sure not to move the die too far so you avoid the SMDs, and keep it warm enough to avoid cracking the die, as you mentioned previously. I know people who skip the heating step, but I've only had about an 80% success rate without heat and (aside from this hilariously botched job) a near-100% success rate with some heat. Just don't use a cheap $20 heat gun and a handheld thermocouple, lol. As for fixing the pins, I am strongly considering it. None of them are actually "bent"; they are hanging freely. The solder pads still look very clean. I imagine if I heat the rest, remove them completely, and find a jig to line up the pins, I could probably fix this.

-

Buddy, it was the heat that made the pins fall off; the processor was completely suspended, lol. The cheap heat gun we have doesn't have temperature control; it's simply a twisty knob that says "warm" and "hot". I used a thermocouple to monitor surface temps up to roughly 314°F (156°C, the melting point of indium), but it turns out that either the probe was inaccurate or the surface temp didn't reflect the actual temperature of the substrate. Pins started to fall off en masse, lol. I am quite experienced with delidding. I'd wager that I've delidded more processors than most people in this world; this is just the consequence of rushing for the sake of science. I'll still bask in its crispy glory any day of the week.

-

@Shimejii I did in fact learn something from delidding this processor: too much heat = bad time. I did manage to get some testing done before I did the deed. Very impressed with it; it would make a good ITX chip for sure. I didn't see a "limit 1 per customer" notice on the website, so... brb.

-

You ain't my dad. I am gonna delid two now just because.

-

I don't know. If I were playing Elden Ring with AI enabled while wearing the sunbro armor and all of a sudden Solaire invaded, yelled "420 Praise It" and defeated a boss with me, that'd be pretty sweet. I'd sacrifice some British melancholy for that.

-

Oh, I wasn't gonna delid for performance. I just genuinely want to see the CCD configuration to confirm these match a 5800X3D. Nobody else is dumb enough to delid a limited edition processor, so someone has to do it. I am about 99% confident I'll break it. My last Ryzen delid didn't go so well, lol.

-

With how limited these limited limits are, I want to buy one and delid it, but if I kill it, someone somewhere in the world is gonna yell at me.

-

Had to do a double-take on this one. Looks like there is a new 5000 series processor launching, and oddly enough, it's Micro Center exclusive. I saw the leaks about this one, but this seems to confirm it:

My thoughts

I originally saw the processor listed on the Micro Center store page (I was looking for something to throw in a new ITX build). My favorite spec from the processor has to be this one:

Very curious as to why AMD is launching this so late in the game. It would be quite an upgrade for anyone on Zen 1 or 2, that's for sure, but I imagine it would cut into their potential 7000 series sales, especially since AM4 boards and DDR4 memory are much cheaper than AM5 boards and DDR5.

Sources

https://community.microcenter.com/discussion/13402/micro-center-unveils-exclusive-launch-of-amd-ryzen-5-5600x3d-processor
https://www.microcenter.com/product/667765/amd-ryzen-5-5600x3d-vermeer-am4-33ghz-6-core-boxed-processor-heatsink-not-included

Also, it's been a long time since I did a tech news post. Whoever did the template, high five.

-

EU ban on non-removable laptop and smartphone(?) batteries

MageTank replied to jboman1980's topic in Tech News

Where do I find one of these rural villages with smartphones that need battery repairs but no stores that sell tools? Sounds like a very niche village, lol. "We have modern technology, electricity, cellular towers and smartphones, but we draw the line at having hardware and convenience stores." Also, people in the Western world still have to travel to get to a Walmart. If you live in Gilbert, WV, you have to travel 30 miles to Logan just to reach the nearest Walmart. God forbid you want pizza that doesn't taste like greasy cardboard... Might as well fly a helicopter to the nearest bordering state, lol.