
Posts posted by Favebook

-

-

Is anyone else having the problem where some Einstein tasks get stuck at 99% and won't progress any further, while BOINC won't download new ones until those finish? I have to babysit 4 of my systems. I'm thinking of just giving up on Einstein, to be honest. This is happening on 4 different configurations with similar GPUs (2080/2080S).

-

6 hours ago, GOTSpectrum said:

Yeah, primegrid is the GPU task, you may need to edit your cc_config file to let it use multiple GPUs though.

Looks like I can't add WCG for some reason so guess I won't be helping with the jav throw

By default, on one of my systems, PrimeGrid uses 2 out of 2 GPUs but doesn't utilize them fully. Do you know where I can find a guide on editing cc_config files so it uses them fully and efficiently? I just spent about 30 minutes searching for one but couldn't find it. I remember setting up my app_config file for WCG, but I don't remember doing anything for PrimeGrid.
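For reference, BOINC reads two XML files for this. `cc_config.xml` (in the BOINC data directory) holds client-wide options; `<use_all_gpus>1</use_all_gpus>` inside `<options>` makes the client use every GPU instead of only the most capable one(s). `app_config.xml` (in the project's folder under `projects/`) holds per-application settings; a `<gpu_usage>` below 1 makes BOINC run several tasks per GPU, which is the usual way to push utilization up. A minimal sketch that generates both files, assuming you want 2 tasks per GPU and 1 CPU core per task. The app name `PRIMEGRID_APP_NAME` is a hypothetical placeholder: take the real short name from the `<app><name>` entries in `client_state.xml`.

```python
import xml.etree.ElementTree as ET


def build_cc_config() -> str:
    """Client-wide config: use every GPU, not only the most capable one."""
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    ET.SubElement(options, "use_all_gpus").text = "1"
    return ET.tostring(root, encoding="unicode")


def build_app_config(app_name: str, tasks_per_gpu: int, cpu_per_task: float = 1.0) -> str:
    """Per-project config: run `tasks_per_gpu` tasks of `app_name` on each GPU."""
    if tasks_per_gpu < 1:
        raise ValueError("tasks_per_gpu must be >= 1")
    root = ET.Element("app_config")
    app = ET.SubElement(root, "app")
    ET.SubElement(app, "name").text = app_name
    gpu = ET.SubElement(app, "gpu_versions")
    # Each task claims this fraction of a GPU, so 0.5 means 2 tasks per GPU.
    ET.SubElement(gpu, "gpu_usage").text = str(1 / tasks_per_gpu)
    ET.SubElement(gpu, "cpu_usage").text = str(cpu_per_task)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_cc_config())
    # HYPOTHETICAL app name: replace it with the real one from client_state.xml.
    print(build_app_config("PRIMEGRID_APP_NAME", tasks_per_gpu=2))
```

After saving the files, "Read config files" in the BOINC Manager's Options menu (or a client restart) should pick them up. Whether 2 tasks per GPU actually helps depends on the PrimeGrid sub-project, so it's worth comparing task times before and after.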

-

Is there no easy way to bunker some projects while still uploading others? It's been a long time since I've done this. I guess it isn't possible with a single instance of BOINC Manager, am I right?
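One common workaround, as far as I know, is to run a second BOINC client with its own data directory for the projects you want to bunker, and switch only that client's network activity off. A minimal sketch that assembles the command lines, assuming the client's `--allow_multiple_clients`, `--dir` and `--gui_rpc_port` options and `boinccmd --set_network_mode never`. The executable name, directory, port 31418 and password below are hypothetical placeholders; 31416 is the default GUI RPC port that the first client already uses.

```python
DEFAULT_RPC_PORT = 31416  # port used by the normal (first) BOINC client


def second_client_args(boinc_exe: str, data_dir: str, rpc_port: int) -> list[str]:
    """Command line for an extra BOINC client with its own data directory."""
    if not 1024 <= rpc_port <= 65535:
        raise ValueError("pick an unprivileged TCP port")
    if rpc_port == DEFAULT_RPC_PORT:
        raise ValueError("the first client already listens on the default port")
    return [boinc_exe, "--allow_multiple_clients",
            "--dir", data_dir, "--gui_rpc_port", str(rpc_port)]


def bunker_args(rpc_port: int, password: str) -> list[str]:
    """boinccmd call that stops all network traffic on the bunker client only."""
    return ["boinccmd", "--host", f"localhost:{rpc_port}", "--passwd", password,
            "--set_network_mode", "never"]


if __name__ == "__main__":
    # HYPOTHETICAL values: adjust paths, port and password for your setup.
    print(" ".join(second_client_args("boinc", "/var/lib/boinc-bunker", 31418)))
    print(" ".join(bunker_args(31418, "<gui_rpc_auth password>")))
```

The normal client keeps uploading as usual, and the Manager can be pointed at `localhost:31418` to manage the bunker instance separately.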

-

On 4/28/2021 at 2:19 PM, leadeater said:

Crunch_4Science

Can we add this to the OP?


-

2 hours ago, FakeKGB said:

That sucks, because it's causing my games to crash/lag.

Decrease your clocks and it will be stable.

-

So, in how many hours/minutes does the event start exactly? Are we using GMT?

-

Who do I contact in order to donate to the prize pool? @GOTSpectrum

-

4 hours ago, Den-Fi said:

Yeah, I messaged them a day before making this.

Haven't heard back yet, so I figured I'd see if anyone else has been IP banned from there in the meantime.

One of my networks (which I usually use with a VPN for torrents) got blacklisted on their website after I accessed it a few times (without the VPN or any active torrents).

Since I don't use that network often, I just forgot about it and never contacted him. When I tried again with that network 3-6 months later, it worked fine.

He probably has an automated system for that.

-

10 minutes ago, YellowJersey said:

I just did a clean OS install and then reinstalled F@H. It's working now. Probably didn't need to reinstall the OS...

Hahahah, you most likely didn't, but if you didn't have any important settings or apps configured, you're good to go. If I were to reinstall my OS, I'd need about a month to set everything up the way it was.

-

11 hours ago, YellowJersey said:

That's the weird part. It's been running fine since March with just default settings. I haven't changed anything and it's started doing this. I even tried uninstalling and then installing the latest client, but to no avail.

Did you delete the previous config before reinstalling?

Can you try deleting all slots, exiting F@H, killing it in Task Manager and then starting it up again?

-

2 hours ago, YellowJersey said:

For F@H, what does it mean when it says it's waiting on "FaHCore Run?"

It could be a badly configured F@H. What did you do before you started experiencing this?

-

1 minute ago, LazyDev said:

I've not been able to get it to work for over a month, possibly due to a conflict between iCue and HWinfo.

This is a big problem for me too. If I run HWinfo, I have to reboot my PC afterwards before iCue works again. I lose almost all of iCue's functionality when I run HWinfo.

-

13 hours ago, Happiness_is_Key said:

Looking back at that now, I'm not sure what I meant. You got me. My apologies. I guess I forgot about Bitcoin having money value while typing all of that. Hahahaha, just one of those days. I'll strikethrough that in an edit.

No worries, you only forgot about "only" $350 billion, so nothing serious.

-

42 minutes ago, Happiness_is_Key said:

That's neat. Yes, understood. I just want to make sure that the efforts are going toward something that benefits something rather than consuming a lot of electricity and computer resources for just points (no offense Bitcoin people). While that is fun, I'd rather help people with my resources. That's the only reason why I ask

Not sure I understand this reference. Bitcoin is not "just point(s)". Bitcoin has value.

-

-

15 hours ago, LazyDev said:

Don't know if it's my system or what, but this error is something that does crop up from time to time.

That's just the regular WU-shortage error: the server had no WUs to give you at the time. Still, check your internet connection anyway; ISPs can crap the bed sometimes.

-

11 minutes ago, shorttack said:

Found your sight last weekend and joined immediately. Giving you 3090, 3080, and 2080 super clients.

What I can't explain is why my clients are doing so much better than your average clients? The 3090 is doing 51.5% better than the database average on project 13428, yet my machine is an Ivy Bridge_E 4-core from maybe eight years ago. However, almost all my clients run Linux Mint 19. My Windows testing never came near to a 50% Windows penalty.

Any ideas? Any thoughts for new features that allowed you access to folding client platform data?

Probably due to Linux. Also, those are new cards and there isn't much data on them yet, which is why their PPD can vary a lot from the database average.

Linux does give you better PPD, that's for sure, but I wouldn't say every unit is 50%+ better than on Windows.

-

10 hours ago, LazyDev said:

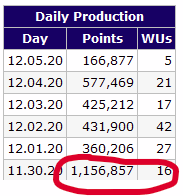

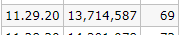

So far, the 2060's have not stopped at all within the past 24 hours since I dropped the power limit. The overclock was hit and miss in terms of the core restarting, and most of the time it was not an issue. Today's numbers are impressive, considering I dropped 300 watts off the total system power draw. Something my bank account will love.

Even more impressive is (Not the 69 work units completed) that I'm catching up with you

Avoid core restarts; stability is much better for your PPD than overclocking. I learned that the hard way back in 2018. If you aim to fold all year round, consider a slight overclock combined with a lower power limit.

You can see that my only 2080 Super, at 55% PL with a small OC (+30 CC/+250 MC), has been folding stably since the event ended at exactly 2.73M PPD, while using a fraction of the power it drew during the event, when I ran it at 105% PL with the same OC and it did 2.8-3.2M PPD.

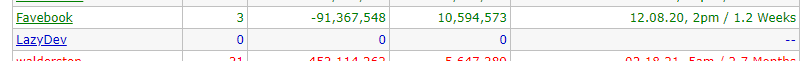
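To put a rough number on that trade-off, here is a small sketch comparing PPD per unit of power limit for the two settings above (2.73M at 55% PL vs 2.8-3.2M at 105% PL). It assumes the card actually draws close to its power limit, so PL% stands in for watts; that assumption is mine, and real draw can sit below the limit, so treat the result as an estimate. Under it, the 55% setting comes out at roughly 1.6x to 1.9x the PPD per watt.

```python
def ppd_per_pl_percent(ppd_millions: float, power_limit_pct: float) -> float:
    """Rough efficiency proxy: PPD (in millions) per percent of stock power limit.

    ASSUMPTION: the card draws close to its power limit, so PL% stands in
    for watts. Actual draw can be lower, which would change the ratio.
    """
    if power_limit_pct <= 0:
        raise ValueError("power limit must be positive")
    return ppd_millions / power_limit_pct


def efficiency_gain(low: tuple[float, float], high: tuple[float, float]) -> float:
    """How many times more PPD per unit of power the `low` setting delivers."""
    return ppd_per_pl_percent(*low) / ppd_per_pl_percent(*high)


if __name__ == "__main__":
    low_pl = (2.73, 55)  # 2080 Super at 55% PL, +30 CC/+250 MC
    for event_ppd in (2.8, 3.0, 3.2):  # event range at 105% PL, same OC
        gain = efficiency_gain(low_pl, (event_ppd, 105))
        print(f"{event_ppd}M at 105% PL -> {gain:.2f}x PPD per watt at 55% PL")
```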

Ah, yes, all of you who pass me this year will have a hard time keeping your spots next year. All my GPUs are currently mining to recoup the folding month's bill. Also, I'll have to ask Jason from EoC to start accepting crypto donations, as converting crypto to PayPal is a hassle nowadays, and using a credit card to donate via PayPal is not something I'm into. Their fees and conversion rates are absurd.

-

20 minutes ago, yaboistar said:

Heh, enjoy your rank-ups while you can; I've got to cover some electricity costs with my cards. Don't worry, I will catch you next year.

-

36 minutes ago, LazyDev said:

My 2060's seems to be very stable with the 125MHz overclock on the core, and 800 on the memory with a power limit of 78%. Still getting around 1.8M PPD on these, despite dropping 200 watts on use. Ironically, the core restarts have stopped, which was caused by one of the 2060's. I have set a custom fan curve, as the default curve wasn't sufficient enough to keep the temperatures down. My system just sounds like gentle wind, for those wondering if this system was loud.

Will continue to monitor, but I'm already liking the results of the lower power limits.

I wouldn't really call it stable: you can see that your 2060 (folding slot 3) had 2 core restarts. Luckily, one of them didn't drop the WU, but you probably lost a chunk of progress and had to redo it. The first restart, however, dropped the WU entirely and you had to download a new one. I can't tell without the full logs how much folding time those 2 restarts cost, but I can say for sure that it wasn't worth it. The extra PPD you get from OC-ing is tiny compared to the risk of dropping a WU.

You did get lucky over the last few days (if you ran the same settings during that time). But those 2 restarts (on 25.11. and 26.11.) are not something you should settle for. The card may be stable on most WUs, but some will fail for sure. +125 CC is quite a lot imho; you should be happy it has worked with that OC for so many days, especially if it is stable in benchmarks/games, but for folding I'd recommend dropping it to +80 at most, if not lower.
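The "not worth it" argument can be made concrete with a simple expected-value model. This is a hypothetical linear sketch, not how F@H scores work: it assumes the OC adds a fixed fraction of PPD and that each restart loses a fixed fraction of a WU's work. It ignores F@H's quick-return bonus, which makes lost time cost even more, so it flatters the overclock. With a hypothetical +3% gain and restarts that drop the whole WU, losing about one WU in 34 already cancels the gain.

```python
def expected_relative_ppd(oc_gain: float, restart_prob: float, lost_fraction: float) -> float:
    """Expected PPD relative to a stable, non-overclocked card (1.0 = no change).

    oc_gain:       fractional PPD gain from the overclock (0.03 means +3%)
    restart_prob:  chance that a given WU hits a core restart with the OC
    lost_fraction: fraction of that WU's work lost per restart
                   (1.0 = WU dropped, smaller = rolled back to a checkpoint)
    HYPOTHETICAL linear model; it ignores the quick-return bonus.
    """
    for name, value in (("restart_prob", restart_prob), ("lost_fraction", lost_fraction)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    return (1.0 + oc_gain) * (1.0 - restart_prob * lost_fraction)


def break_even_restart_prob(oc_gain: float, lost_fraction: float = 1.0) -> float:
    """Per-WU restart probability at which the overclock stops paying off.

    Solves (1 + g) * (1 - p * f) = 1 for p.
    """
    if lost_fraction <= 0:
        raise ValueError("lost_fraction must be positive")
    return oc_gain / ((1.0 + oc_gain) * lost_fraction)


if __name__ == "__main__":
    p = break_even_restart_prob(0.03)  # hypothetical +3% from the OC
    print(f"break-even: about one dropped WU in {1 / p:.0f}")
```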

-

2 minutes ago, Gorgon said:

Are you adding a modest overclock - +50-75?

Im using +50

Yeah, forgot to mention, I am using +20-60 CC and +100-300 MC depending on the card and its performance (read: stability) in benchmarks, mining and F@H.


-

1 hour ago, Gorgon said:

How low can you go with Power Limits without losing efficency?

The answer appears to be - All the way.

Here I'm transitioning from 80% FE Power Limits to the GPUs minimum:

-snip-

So a further 27% reduction in power for only a 12% reduction in PPD. I'm going to let this run for a while but so far no issues.

On 4 of my 2080 Supers, I can't usefully go lower than 48% PL. At 100-112% PL they do 3-3.1M PPD; at 50-100% PL they do 2.5-2.9M.

Once I go below 48% PL, the core clock drops from 1650 MHz (at 50% PL) to 1200-1400 MHz, which drops PPD to 1.7-2.1M.

The lowest PL these cards allow is 42%, which drops the core clock to 900-1050 MHz. I haven't tested PPD there, but I'd estimate 1.2-1.7M.
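Gorgon's figures translate directly into an efficiency change: efficiency is PPD divided by power, so cutting power by 27% while losing 12% of PPD multiplies PPD per watt by 0.88 / 0.73, or about 1.205 (roughly 20% more PPD per watt). A minimal sketch of that arithmetic, with both drops given as fractions:

```python
def efficiency_change(power_drop: float, ppd_drop: float) -> float:
    """Factor by which PPD per watt changes after cutting power by `power_drop`
    and losing `ppd_drop` of PPD (both fractions, e.g. 0.27 for 27%)."""
    if not (0.0 <= power_drop < 1.0 and 0.0 <= ppd_drop <= 1.0):
        raise ValueError("drops must be fractions, and power_drop must be < 1")
    return (1.0 - ppd_drop) / (1.0 - power_drop)


if __name__ == "__main__":
    # Gorgon's numbers: a further 27% less power for 12% less PPD.
    print(f"PPD per watt factor: {efficiency_change(0.27, 0.12):.3f}")
```

A factor above 1 means the lower power limit is the more efficient setting, even though the absolute PPD is lower.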

-


BOINC Pentathlon 2021 (in Folding@home, Boinc, and Coin Mining)

Once you set it up in preferences, you have to click the "Update" button on every BOINC client.
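With many machines, the same "Update" can be sent from one place with `boinccmd --host HOST --passwd PASSWORD --project URL update`. A minimal sketch that loops over several clients, assuming each one accepts remote GUI RPC (for example through `remote_hosts.cfg` or the `allow_remote_gui_rpc` option) and uses the password from its `gui_rpc_auth.cfg`. The project URL, host names and password below are hypothetical placeholders. It defaults to a dry run that only prints the commands.

```python
import subprocess

PROJECT_URL = "https://example.org/boinc_project/"  # HYPOTHETICAL master URL
HOSTS = ["rig1", "rig2:31416"]                       # HYPOTHETICAL host names
RPC_PASSWORD = "changeme"                            # HYPOTHETICAL password


def update_command(host: str, password: str, project_url: str) -> list[str]:
    """boinccmd call equivalent to pressing 'Update' for one project on one host."""
    return ["boinccmd", "--host", host, "--passwd", password,
            "--project", project_url, "update"]


def update_all(hosts: list[str], password: str, project_url: str,
               dry_run: bool = True) -> dict:
    """Send 'update' to every host; returns boinccmd's exit code per host
    (None in dry-run mode, where commands are only printed)."""
    results = {}
    for host in hosts:
        cmd = update_command(host, password, project_url)
        if dry_run:
            # Mask the password when printing.
            shown = [("***" if arg == password else arg) for arg in cmd]
            print(" ".join(shown))
            results[host] = None
            continue
        proc = subprocess.run(cmd, capture_output=True, text=True)
        results[host] = proc.returncode
    return results


if __name__ == "__main__":
    update_all(HOSTS, RPC_PASSWORD, PROJECT_URL, dry_run=True)
```

Set `dry_run=False` once the printed commands look right; a non-zero exit code usually means the host refused the connection or the password was wrong.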