Posts

43

Profile Information

Gender

Female

Location

Kassel & Frankfurt a.M., Germany

Interests

Server & Datacenter Infra + Building these Datacenters

Biography

IT & Tech Nerd

Occupation

Datacenter Engineer @ NTT GDC

Recent Profile Visitors

1,057 profile views

-

I *JUST* upgraded.. I HATE it when this happens…

zENjA replied to AdamFromLTT's topic in LTT Releases

Maybe I'm old-school, but I think "more speed" is not everything. I would love to step my U.2 NVMe server down from PCIe x4 per drive to PCIe 5.0 x1 per drive and then fit 36 SSDs in 1U. Or maybe it's just me, dealing with servers all day.
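To put rough numbers on the x4-vs-x1 idea: PCIe 3.0, 4.0 and 5.0 all use 128b/130b encoding while the per-lane rate doubles each generation, so a 5.0 x1 link carries about what a 3.0 x4 link does. A quick Python sketch, counting only the line coding (real drives see less after protocol overhead) and assuming every drive gets its own dedicated link with no PCIe switch in between; the function names are made up for this example:

```python
# Rough per-direction PCIe link bandwidth, counting only 128b/130b line coding.
# Real throughput is lower once packet/protocol overhead is included.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # transfer rate per lane in GT/s
ENCODING = 128 / 130  # 128b/130b coding, used by PCIe 3.0, 4.0 and 5.0


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe link in GB/s."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


def lanes_for(drives: int, lanes_per_drive: int) -> int:
    """Total lanes needed if every drive gets its own dedicated link."""
    return drives * lanes_per_drive


if __name__ == "__main__":
    print(f"PCIe 3.0 x4: {link_gb_per_s(3, 4):.2f} GB/s per drive")
    print(f"PCIe 5.0 x1: {link_gb_per_s(5, 1):.2f} GB/s per drive")
    print("36 drives at x4:", lanes_for(36, 4), "lanes")
    print("36 drives at x1:", lanes_for(36, 1), "lanes")
```

Both links come out at about 3.94 GB/s, and with x1 per drive, 36 drives need 36 lanes instead of 144.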

Everyone said this was impossible - Backyard Fiber Run

zENjA replied to jakkuh_t's topic in LTT Releases

I build datacenters (megawatt scale) and I love single-mode fiber. The main question for me: aren't 12/24/48/96/144/288/864-fiber runs the default in the US/CA? I guess you got this cable to pull into underground piping later... and I guess you just pre-ordered the terminated cable for convenience (I normally just splice my own cables). DAC splitter cables: normally you go to the CLI and set the port to split mode; at least that's what I need to do on my Mellanox hardware. Did you see that there are single-wave BiDi 100G QSFP28 optics available? Flexoptix here in Germany has them in stock -> Q.B161HG.10.AD + Q.B161HG.10.DA (Joking:) You tell me 3x 400G? Let me introduce you to DWDM.

For power testing: build yourself a 3-phase breaker box with, say, two outlets per phase, and monitor it with a Shelly 3EM. I've used two of these in my datacenter at work to get monitoring data for an A & B line on an 80A 3-phase fixed install. If you have something else in mind, I could create a quick wiring diagram so someone could wire up a box for that. This could be a quick way to test efficiency between 120V and 240V, or 400V if your power provider is nice to you. Maybe I just have a very German view on power infrastructure... Yes, you already have a PSU tester for that, I know, but this would be a small box that you could just throw on the table and start testing.
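For reading the numbers off such a box, here is a small Python sketch of the arithmetic. It assumes a balanced load and, for the feed capacity, a power factor of 1. The meter only sees the AC side, so for an efficiency comparison the DC output has to come from a known load (e.g. the PSU tester). The helper names are hypothetical, made up for this example:

```python
import math


def three_phase_power_w(v_line_to_line: float, amps: float, power_factor: float = 1.0) -> float:
    """Active power of a balanced three-phase feed: sqrt(3) * V_LL * I * PF."""
    return math.sqrt(3) * v_line_to_line * amps * power_factor


def total_from_phases(per_phase_w: list[float]) -> float:
    """Total active power from per-phase readings (one value per meter channel)."""
    return sum(per_phase_w)


def psu_efficiency(dc_out_w: float, ac_in_w: float) -> float:
    """Efficiency as DC output power divided by AC input power."""
    if ac_in_w <= 0:
        raise ValueError("AC input power must be positive")
    return dc_out_w / ac_in_w


if __name__ == "__main__":
    # An 80 A three-phase feed at 400 V line-to-line, power factor assumed 1
    print(f"80 A @ 400 V 3p: {three_phase_power_w(400, 80) / 1000:.1f} kW")
```

For the 80A 3-phase feed at 400V this gives about 55.4 kW. For 120V vs 240V, run the same DC load at both voltages; the setup with the lower AC input reading is the more efficient one.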

-

I use a TV (Samsung GQ55QN95A): 4K, HDR10+ & HLG

-

I think the slide at 1:44 has an error: the scale is... strange. Cinebench R23? My AMD Ryzen 7 3700U gave me 3146 in R23 multi-core. For example, an i5-8259U scores 1641 in R20 and 4206 in R23. So I guess it's either:
A: an R20 rating with a missing 0 in the scale, or
B: an R23 rating, and the scale is "just for comparison".
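To show how far apart the two benchmark versions are, here is a rough Python conversion using the only R20/R23 pair quoted above (i5-8259U). It assumes every CPU scales by the same R23/R20 ratio, which is only an approximation across architectures:

```python
# The only R20/R23 pair quoted in the post: i5-8259U multi-core scores.
I5_8259U_R20 = 1641
I5_8259U_R23 = 4206


def r23_to_r20_estimate(r23_score: float) -> float:
    """Rough R20 equivalent, assuming every CPU scales by the i5-8259U's ratio."""
    return r23_score * I5_8259U_R20 / I5_8259U_R23


if __name__ == "__main__":
    ratio = I5_8259U_R23 / I5_8259U_R20
    print(f"R23/R20 ratio: {ratio:.2f}")
    print(f"Ryzen 7 3700U, R23 3146 -> R20 ~{r23_to_r20_estimate(3146):.0f}")
```

That puts an R23 score at roughly 2.5x the R20 score of the same chip, which is why a scale labeled R23 but filled with R20-sized numbers (or vice versa) looks strange.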

-

For testing, I thought about connecting two external TB3 "GPU" boxes, but putting 40/100G Mellanox cards in them. Yes, I know iperf is CPU-bound on most systems I know at 40G or higher. Maybe I'll see my DC customers bringing these mini Macs in as a cluster... that would be interesting.

-

"15TB... more than your total household..." Data hoarder: hold my ingest drive...

-

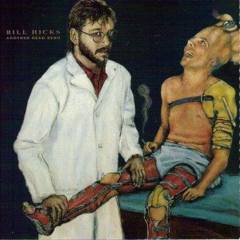

zENjA changed their profile photo

-

Yes, I guess it's a "Dell/EMC" OEM system, so it's a handle to carry the server / pull it back into the rack from behind. (As a DC tech, I like this.)

-

I've had Shadow since they started. The streaming quality is OK, but I've found several downsides for me:
- my hardware has no hardware decode for it (at least an Intel Core i 3000 series is needed)
- too little RAM & CPU for workloads without GPU support
- single-monitor support
- mouse support on iOS
- no IPv6 support

I also got the Ghost. Yes, it's OK, but a base i3 NUC is more versatile, and I need at least 3 monitors at 1440p. I think it's a great service for 95% of gamers (with the right internet uplink), but I'm too much of a heavy user. (I have 3 GPUs in my system: an ATI V7900 just for 4x 1440p, a GTX 1060 for 3x 1440p to do stuff, and an RTX 2070 Super for 1x 1440p, compute and too little gaming at the moment. It's all on a P9D-WS; compute is handled by real servers in my garage rack.)

-

Their devices are very cool. 1280x800 is fine; it's 16:10, which I like, and I have the same resolution on my 12" ThinkPad X201. I've seen their devices with a lot of customer datacenter techs, and I asked them why:
- small & light
- a real PC
- no touch
- "high"-resolution screen for the size
- real storage
- can do real work
- all the I/O they really need, like Ethernet, full-sized USB & USB-C
- Linux/BSD etc. support
- no strange T2 chip, locked-down BIOS etc.
- some even run VMs on it (native Linux and their company Windows in a VM)
- some told me they were waiting for one with Thunderbolt; they want to connect a Sonnet Solo 10G SFP+ adapter for network testing & troubleshooting

I've heard them call it (the Pocket 2) the Eee PC they always wanted, and for watching video and writing some mails, they also carry an iPad. So two light devices with long battery life and easy charging.

-

I'm running my handy X201 with 8GB; that's OK. On my work laptop, where AV, Webex etc. bloat is installed & starts automatically (because IT says so...), 8GB is at or beyond the limit. On my "desktop" ThinkPad W520, 16GB is mostly fine, unless I do something heavy like having Firefox, Opera, Outlook, a large Visio file and MS Project open at the same time. Then I'm at 14GB to full RAM use; normally it's ~10-12GB. In a comparison between my W520 (16GB, 4x DDR3), a NUC8 i3 with 32GB (2x DDR4) and my test system (Asus P9D-WS, E3-1231 v3, 32GB, 4x DDR3 ECC), the W520 feels "slower", but that's mostly the fault of the GPU. On the work side, I need way more: multiple older servers (full spec; OK, 2x E56** 6-core) with 48GB+, and one main host with 768GB is fine. OK, I'm doing load testing for customers on their clusters at the moment, nothing at "normal" scale.

-

TL;DR: Yes, your server room is really small to have a rack, a switch box and a UPS in there.

Cabling: think about zero-U PDUs with C13/C14 or even C19/C20 outlets for your servers. Some can also be switched and/or monitored. Also have two of them, A from the grid & B from the UPS, as you're not at the scale to have two UPS systems. Get an ATS (automatic transfer switch) for single-PSU devices, so they can benefit from both sides. You could also set the UPS output to 220-230V to get better efficiency out of your server PSUs. If you only use the PDU outlets, you can't plug in a 120V device by accident, because the plugs are different.

Long version: I plan/build/operate colocation datacenters at a scale of 90-120MW per site / ~5-15MW per building. It's like "Unboxing Canada's BIGGEST Supercomputer!" but at scale. UPS systems at scale sometimes have problems, but they should have internal redundancy and not be loaded over 45%, and for efficiency not under 30%.

I believe you got a double-conversion UPS, so you could install an ATS or STS (static transfer switch) between the power wall box and your UPS. This way you could switch the UPS power source from the grid to a backup generator if needed. I don't know how often this happens in your area, but here in Germany I only had one unplanned outage at home this year, for 4 minutes (+ one announced for maintenance).

In the DC we normally have no outages, because of the 110kV / 3-transformer N+1 setup, but we get all the junk that comes over the line, like spikes from lightning, or even direct lightning strikes. When that happens, all buildings disconnect themselves from the grid, run on UPS and start their generators (mostly 16-24 cylinders with ~35-70 l of displacement, at 1.8-2.4MW of power per generator). Under full load each of them wants ~500 l (~3 barrels) of extra-light heating oil (similar to diesel) per hour, and we have at least enough on site to power them for 72h. Our UPS systems get a refresh after 8 years, when the batteries "only" have 80-90% of their capacity left.
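The sizing rules above boil down to simple arithmetic. A minimal Python sketch; the 30-45% load band and the ~500 l/h and 72h figures are from this post, while the 500 kW UPS carrying 200 kW is a hypothetical example, and the function names are made up:

```python
def load_fraction(load_kw: float, ups_rating_kw: float) -> float:
    """Share of the UPS rating that the load uses (0.0 to 1.0+)."""
    return load_kw / ups_rating_kw


def in_load_window(fraction: float, low: float = 0.30, high: float = 0.45) -> bool:
    """True if the load sits in the 30-45% band (efficient, with headroom)."""
    return low <= fraction <= high


def fuel_litres(litres_per_hour: float, hours: float, generators: int = 1) -> float:
    """Fuel needed to run the given number of generators at that burn rate."""
    return litres_per_hour * hours * generators


if __name__ == "__main__":
    # Hypothetical UPS: 500 kW rating carrying 200 kW
    frac = load_fraction(200, 500)
    print(f"load {frac:.0%}, in window: {in_load_window(frac)}")
    # ~500 l/h per generator at full load, 72 h autonomy
    print(f"per generator for 72 h: {fuel_litres(500, 72):,.0f} l")
```

At full load, 72h of autonomy works out to about 36,000 l of fuel per generator.
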
I've got some (18) of these and have them connected to an aged Dell 5500VA UPS to get longer runtime. I use Yuasa NPLs, and at work we use Yuasa SWL UPS blocks, mostly 56-60 in a string, to get high DC voltages for the UPS, because it's more efficient and because this way we get lower currents and don't have to use "thick" cables (OK, they are still as thick as a hand). All our UPS systems are separated from the battery blocks; they are in different rooms. At scale there's not only Eaton but also Vertiv (Emerson Network Power) and Schneider Electric (APC).
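Why long battery strings help: at the same power, current scales with 1/V and resistive cable loss with I², so a higher string voltage means much lower current and much lower loss. A minimal Python sketch, assuming 12V nominal blocks (check the datasheet of your actual blocks) and a hypothetical 100 kW discharge, just to compare currents:

```python
BLOCK_V = 12.0  # assumption: 12 V nominal VRLA blocks


def string_voltage(blocks: int, block_v: float = BLOCK_V) -> float:
    """Nominal DC voltage of blocks wired in series."""
    return blocks * block_v


def dc_current(power_w: float, voltage_v: float) -> float:
    """Current drawn from the battery string at a given DC power (I = P / V)."""
    return power_w / voltage_v


def relative_cable_loss(v_low: float, v_high: float) -> float:
    """I^2*R loss at v_high relative to v_low, same power and same cable."""
    return (v_low / v_high) ** 2


if __name__ == "__main__":
    for blocks in (4, 60):
        v = string_voltage(blocks)
        # Hypothetical 100 kW discharge, only to compare currents
        print(f"{blocks} blocks: {v:.0f} V, {dc_current(100_000, v):,.0f} A")
    print(f"loss at 720 V vs 48 V: {relative_cable_loss(48, 720):.4f}")
```

60 blocks give 720V nominal and about 139A at 100 kW, versus roughly 2,083A at 48V; on the same cable, the 720V string has 1/225 of the resistive loss.
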

-

@RejZoR Yes, the naming is a bit strange. Same as SK hynix, Samsung or Hitachi/Toshiba (in the enterprise HDD market).

-

Jake types in his user/password on an external system to give Linus access to his share. Please don't: the other person could capture your credentials. Create a separate share & user for the person who wants to back up; in the share config you can also set quotas so your mate doesn't fill your NAS with his crap. I upload my "external" backup to work, where I have racked my own server (a very old system). But at some friends' places, I have a Raspberry Pi with a USB HDD that opens a reverse SSH tunnel to me (the other end could also be a cheap VM) and maps the SSH port there. Hyper Backup is able to back up over SSH; just use "rsync" as the backup type. You may have to edit the path to get it working; in my case I mount the HDD at "/home/$USER/backup". Even for some WebDAV services that don't work out of the box, just type in "/home" and then you can run your backup.
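A minimal Python sketch of the reverse tunnel on the Pi side, assuming key-based SSH login is already set up so ssh can run unattended. The user name, host name and port 2222 are hypothetical placeholders, and the helper names are made up. By default the forwarded port is bound to the remote host's loopback interface only, so the rsync/Hyper Backup job either runs on that host (pointing at localhost:2222) or sshd there needs GatewayPorts enabled:

```python
import shlex
import subprocess
import time


def reverse_tunnel_cmd(user: str, host: str, remote_port: int, local_port: int = 22) -> list[str]:
    """ssh command that exposes this Pi's local SSH port as remote_port on host."""
    return [
        "ssh",
        "-N",  # no remote command, only forward the port
        "-o", "ExitOnForwardFailure=yes",  # quit if the remote port can't be bound
        "-o", "ServerAliveInterval=30",  # detect a dead connection
        "-R", f"{remote_port}:localhost:{local_port}",
        f"{user}@{host}",
    ]


def keep_tunnel_up(cmd: list[str], retry_delay_s: float = 30) -> None:
    """Restart the tunnel whenever ssh exits (runs forever)."""
    while True:
        subprocess.run(cmd)
        time.sleep(retry_delay_s)


if __name__ == "__main__":
    # Hypothetical account and host names
    cmd = reverse_tunnel_cmd("tunnel", "backup.example.org", 2222)
    print(shlex.join(cmd))
```

On the Pi, you would call keep_tunnel_up(cmd) from a service or boot script; a tool like autossh does the same job.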

-

Wow, maybe I'll replace my X201 / T410 / W520 with an E495 with this CPU. "Battery is rated for 8h": do you have test results for what to expect in reality (office, remote desktop, YouTube; all the non-gaming tasks)?
