
Posts: 697


About maxtch

- Birthday: Feb 11, 1993

Contact Methods

- Twitter: @maxtch

Profile Information

- Gender: Male
- Location: Amherst, Massachusetts, USA
- Occupation: Graduate student and TA at UMass Amherst

System

- CPU: Intel Xeon X5675
- Motherboard: HP Z400 Rev 2
- RAM: 3x Crucial 8GB DDR3-1600 ECC UDIMM
- GPU: Galaxy GTX 1050 2GB
- Case: HP Z400 Case
- Storage: Crucial MX500 1TB
- PSU: HP Z400 430W PSU
- Display(s): HP ZR22w 21.5-inch IPS
- Cooling: HP Z400 CPU cooler
- Keyboard: HP USB keyboard
- Mouse: Dell Laser Mouse
- Operating System: Windows 10 Pro
- Phone: iPhone 12

-

Genshin Impact always starts a new installation and a new game in full-screen mode and detects the resolution automatically. For my setup (PNY RTX 3060 12GB + Dell P2415Q), that means full-screen 4K with all the eye candy enabled for the opening sequence, with no way to lower the resolution or other graphics settings until I finish the first tasks. As a result my game stream kept crashing, because my GPU was overloaded. Is it true that 4K resolution makes almost any game extremely demanding on the GPU?
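A rough way to see why 4K is so demanding: if per-pixel shading dominates the cost of a frame (a simplifying assumption, since geometry, shadow maps and some other passes do not scale with resolution), the GPU's work grows with the number of pixels drawn. This minimal sketch only counts pixels per frame; the resolution table is illustrative.

```python
# Sketch: compare pixels per frame at common resolutions.
# Assumption: GPU load scales roughly with pixel count, which is only
# an approximation for real games.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K UHD": (3840, 2160),  # native resolution of the Dell P2415Q
}


def pixels(name: str) -> int:
    """Return the number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def relative_load(name: str, baseline: str = "1080p") -> float:
    """Return the pixel count relative to the baseline resolution."""
    return pixels(name) / pixels(baseline)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name:>7}: {pixels(name):>9,} px, {relative_load(name):.2f}x 1080p")
```

4K UHD works out to 8,294,400 pixels per frame, exactly four times 1080p and 2.25 times 1440p, so the same settings can need several times the GPU throughput.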


-

This is what is happening: my TrueNAS server has one CPU core pinned at 100% while all the other cores sit idle. How can I balance the load across the cores? The server is ingesting data from another storage server built on an array of spinning rust, using rsync over NFS on 10GbE. TrueNAS does not support RDMA, which makes the problem worse; otherwise I would run NFS over RDMA for this connection, which would greatly reduce CPU load.

System configuration:

- CPU: Intel Atom C3558 (4C/4T)
- Motherboard: ASRock Rack C3558D4I-4L
- RAM: 2x SK Hynix 16GB DDR4-2133 ECC RDIMM = 32GB
- SSD: Kingston A400 120GB (boot SSD)
- HDD: 4x Hitachi 12TB (refurbished) in RAID-Z1
- NIC: nVidia Mellanox ConnectX-3 MCX311A 10GbE

-

Budget (including currency): US$0. The only costs are one RMA and some shuffling of parts between builds.
Country: USA
Games, programs or workloads that it will be used for: Spare computer, no specific tasks.

System configuration:

- CPU: Intel Core i7-10700K
- Motherboard: Asus ROG Strix Z490-G Gaming Wi-Fi
- RAM: T-Force Vulcan Z DDR4-3200 16GB x2
- CPU Cooler: Cooler Master MasterLiquid ML120L v2 RGB version
- Graphics: Sapphire Nitro RX 580 8GB
- SSD: Samsung PM981 M.2 22110 NVMe 960GB
- NIC: nVidia ConnectX-3 MCX311A 10GbE NIC + Brocade 10GBASE-SR transceiver
- PSU: Corsair CX750M
- Case: Corsair 220T RGB

I dug further into why things kept giving me problems and generating an unholy amount of rejected parts, and it turned out to be two problems:

- I had mounted the CPU coolers in my 4U cases with insufficient mounting pressure and thermal paste.
- The set of T-Force RAM I bought was faulty at 3200MHz.

I fixed the first problem when I moved that MSI platform back, and the second one went through an RMA. The RMA came back, I moved the MSI board back into the rack case it was originally in, and it has worked fine ever since. That leaves me with a pile of troubleshooting parts I no longer need, hence the title Wasted Efforts.

This is still a pretty capable gaming and workstation build, but I just have no task assigned to it. Some things of note:

- The BIOS of this Asus board has trouble booting Ubuntu through UEFI, and its onboard peripherals are not supported in macOS (Hackintosh). Since I use a lot of UNIX, this limits how useful the machine is to me.
- The front fans of the case do a good job of emulating a server case if I spin them up.
- Too bad this motherboard lacks the PCIe slots for my spare Tesla K20X.

-

Well, for Ethernet: if you have multiple wired devices with open PCIe slots (desktop PCs), you can upgrade the LAN connection to 10Gbps using some cheap used Mellanox 10Gbps Ethernet cards and a relatively cheap TP-Link 10Gbps Ethernet switch.
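To put the upgrade in numbers, here is a best-case transfer-time calculation. It is a sketch under stated assumptions: decimal units, no Ethernet/TCP/NFS overhead, disks on both ends fast enough to keep up, and a hypothetical 100 GB transfer.

```python
# Idealized transfer-time arithmetic at line rate.
# Assumptions: decimal units (1 GB = 8 Gbit), no protocol overhead,
# and storage on both ends fast enough to keep up, which spinning
# disks often are not at 10Gbps.


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Return best-case seconds to move size_gb gigabytes over a link_gbps link."""
    return size_gb * 8 / link_gbps


if __name__ == "__main__":
    size = 100.0  # hypothetical 100 GB transfer
    for link in (1.0, 10.0):
        print(f"{link:>4} Gbps: {transfer_seconds(size, link):6.1f} s")
```

At line rate, 100 GB takes about 800 s over 1Gbps and about 80 s over 10Gbps; real transfers will be slower, especially from spinning disks.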

-

Best way to test if latency is due to local network or not

maxtch replied to Elijah Kamski's topic in Networking

It can be their ISP too. Also, certain pairs of ISPs have exceptionally bad connections between them.

-

Effect of putting RTX 3060/3070 into PCI_E3: PCIe 4.0 x4...?

maxtch replied to NGamer's topic in Graphics Cards

Your GPU will run normally, but you will see a drop in performance because less bandwidth is available to it. Unless you have something else that needs a lot of bandwidth (for example a high-end network card: a dual-port 100Gb Ethernet card can easily eat up a whole PCIe 3.0 x16 interface), you usually should not do this.
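The bandwidth claims can be checked with approximate per-lane arithmetic. This sketch uses the PCIe 3.0 and 4.0 signaling rates (8 and 16 GT/s per lane) with 128b/130b encoding and ignores packet overhead, so real throughput is somewhat lower.

```python
# Approximate usable PCIe bandwidth per direction.
# PCIe 3.0 runs at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, both using
# 128b/130b encoding; packet/protocol overhead is ignored here.
GT_PER_LANE = {3: 8.0, 4: 16.0}
ENCODING = 128 / 130


def pcie_gbytes_per_s(gen: int, lanes: int) -> float:
    """Return approximate one-direction bandwidth in GB/s for a PCIe link."""
    return GT_PER_LANE[gen] * lanes * ENCODING / 8


def ethernet_gbytes_per_s(ports: int, gbps_per_port: float) -> float:
    """Return aggregate Ethernet line rate in GB/s (one direction)."""
    return ports * gbps_per_port / 8


if __name__ == "__main__":
    print(f"PCIe 4.0 x16: {pcie_gbytes_per_s(4, 16):5.2f} GB/s")
    print(f"PCIe 4.0 x4 : {pcie_gbytes_per_s(4, 4):5.2f} GB/s")
    print(f"PCIe 3.0 x16: {pcie_gbytes_per_s(3, 16):5.2f} GB/s")
    print(f"2x 100GbE   : {ethernet_gbytes_per_s(2, 100):5.2f} GB/s")
```

This gives roughly 31.5 GB/s for PCIe 4.0 x16, 7.9 GB/s for PCIe 4.0 x4 (a quarter of what the card's x16 interface can use), and 15.75 GB/s for PCIe 3.0 x16, which is less than the 25 GB/s a dual-port 100GbE card can push at line rate.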

These are surface mount capacitors.

-

Textures can be coarse or fine (low or high resolution). The finer the texture, the more VRAM it uses.
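As a rough illustration of that scaling, this sketch estimates the memory used by a single texture. It assumes uncompressed RGBA8 (4 bytes per texel) with a full mipmap chain; real games mostly use block-compressed formats, so absolute numbers will be smaller, but the trend is the same: doubling the resolution in each dimension roughly quadruples the VRAM used.

```python
# Rough VRAM footprint of a single texture.
# Assumptions: uncompressed RGBA8 (4 bytes per texel) and an optional
# full mipmap chain; real games usually use block-compressed formats,
# which shrink these numbers considerably.


def texture_bytes(width: int, height: int, bytes_per_texel: int = 4,
                  mipmaps: bool = True) -> int:
    """Return bytes needed for the base level plus (optionally) its mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never going below 1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


if __name__ == "__main__":
    for size in (1024, 2048, 4096):
        mib = texture_bytes(size, size) / 2**20
        print(f"{size}x{size}: {mib:6.1f} MiB with mipmaps")
```

A 4096x4096 texture comes out to 64 MiB for the base level alone and about 85.3 MiB with mipmaps, four times the 2048x2048 figure.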

-

Yup, the HDD is to blame. Get an SSD, preferably an M.2 NVMe drive, and image your HDD onto it (if you can afford an SSD the same size as or larger than your HDD). You can leave the HDD in your machine, but only for storing data you don't access frequently.

-

Retention clip snapped with my card in place, help!!

maxtch replied to turntechgodhead413's topic in Troubleshooting

If you can find the clip somewhere in your case, you can slip it back in from the bottom. However, running your GPU without that clip should not matter much, as long as you have secured the card's PCI bracket properly. I have server motherboards whose PCIe slots have no such clips at all, and GPUs have always run properly in those slots.

-

The first thing I see is your memory speed: you are leaving 50% of your memory interface performance on the table. Enable XMP first. Memory bandwidth is critical to overall system performance, since data moving from one device to another usually has to pass through main memory.
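The 50% figure can be reproduced with peak-bandwidth arithmetic. This is a sketch under hypothetical assumptions: a dual-channel DDR4 system running at the JEDEC default of DDR4-2133 without XMP, compared with DDR4-3200 with XMP enabled.

```python
# Theoretical peak DRAM bandwidth: transfers/s x bus width x channels.
# Hypothetical assumptions: a dual-channel DDR4 system with 64-bit
# (8-byte) channels, comparing JEDEC DDR4-2133 with XMP DDR4-3200.


def peak_gb_per_s(mt_per_s: int, channels: int = 2, bus_bytes: int = 8) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal)."""
    return mt_per_s * bus_bytes * channels / 1000


if __name__ == "__main__":
    jedec = peak_gb_per_s(2133)
    xmp = peak_gb_per_s(3200)
    print(f"DDR4-2133: {jedec:.1f} GB/s")
    print(f"DDR4-3200: {xmp:.1f} GB/s ({xmp / jedec - 1:.0%} more)")
```

That works out to about 34.1 GB/s versus 51.2 GB/s, so XMP at DDR4-3200 offers about 50% more peak bandwidth than DDR4-2133 in this scenario.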

-

That 120mm AIO was never intended for the current case. It is also a reject from the troubleshooting process. I have three 4U industrial rack-mount cases, and they are too small for traditional tower air coolers like the Hyper 212. The best hope of putting an 11900K (or 10700K, or 9700K) in there was a 120mm liquid cooler in the only spot in the case that accepts a 120mm fan. In that mounting scheme the radiator sits directly at the cool-air inlet, which makes things slightly more workable. I took that cooler out of one of those cases once I gave up on the 11900K platform and put the dual-socket Xeon E5-2696v2 system back in.

-

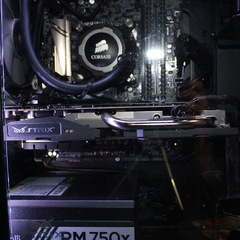

Budget (including currency): $200. This build is mostly a collection of parts that came out of other builds during troubleshooting.
Country: USA
Games, programs or workloads that it will be used for: Backup unit, no specific workload planned.

I was having weird thermal and USB compatibility issues on my main workstations. Troubleshooting them produced enough removed parts to put together a whole build. Since most parts are either rejects or bought used, I am calling this build The Question Mark Build, as almost every important part here has a question mark over its reliability. This is actually my first-ever build with a transparent side panel or any significant amount of RGB.

Parts list:

- CPU: Intel Core i9-11900K. This CPU may have a weak memory controller, as it seems to have reliability trouble with DDR4-3200 memory.
- MoBo: MSI Z590-A Pro. This motherboard seems to have signal integrity issues with its USB 3.2 ports.
- CPU Cooler: Cooler Master MasterLiquid ML120L v2 RGB. Bought used.
- RAM: Corsair Vengeance DDR4-3000 clocked at DDR4-2933. This RAM set is otherwise fine, but the motherboard won't run it at 3000MHz without a BCLK overclock.
- GPU: Sapphire RX 580 Nitro 8GB. This GPU physically conflicts with the reinforcement bar in my 4U rack cases. The case can live without that bar, but running it like that was suboptimal.
- SSD: Samsung PM981A 960GB M.2 22110 NVMe drive. Bought used. This drive looks like a server-grade SSD, since it has a ridiculous number of electrolytic capacitors on it.
- PSU: Corsair CX750M.
- NIC: nVidia Mellanox ConnectX-3 EN CX311A. Bought used. (I do have an all-fiber 10Gbps home network, and most of my computers have CX311A or CX312A cards. That can lead to interesting things, since those cards support RDMA.)
- NIC: Intel AX200 Wi-Fi + Bluetooth card.
- Case + Fans: Corsair iCUE 220T.
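The BCLK workaround for the RAM can be sketched with simple arithmetic. This assumes (hypothetically) that the closest memory setting the board offers at the stock 100 MHz BCLK is DDR4-2933 (really 2933.33 MT/s) and that the memory data rate scales linearly with BCLK. The CPU core clocks are derived from the same BCLK, so they rise by the same factor.

```python
# Sketch: BCLK needed to reach a target memory data rate.
# Hypothetical assumptions: "DDR4-2933" is 2933.33 MT/s at 100 MHz BCLK,
# and the memory data rate scales linearly with BCLK.

STOCK_BCLK_MHZ = 100.0


def required_bclk(target_mts: float, rate_at_stock_mts: float) -> float:
    """Return the BCLK (MHz) that scales rate_at_stock_mts up to target_mts."""
    return STOCK_BCLK_MHZ * target_mts / rate_at_stock_mts


if __name__ == "__main__":
    bclk = required_bclk(3000, 8800 / 3)  # 8800 / 3 = 2933.33 MT/s
    overclock = bclk / STOCK_BCLK_MHZ - 1
    print(f"BCLK needed: {bclk:.2f} MHz (+{overclock:.1%})")
```

Under these assumptions the result is roughly 102.27 MHz, a little over a 2% overclock applied to the memory and the CPU cores alike.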

-

Budget (including currency): US$2000
Country: USA
Games, programs or workloads that it will be used for: Cities: Skylines, KiCad and Eclipse CDT
Other details:

- CPU: Intel Core i9-11900K
- Cooler: EVGA 120mm water cooler
- MoBo: MSI Z590-A Pro
- RAM: Team Force DDR4-3200 16GB x2 kit
- GPU: PNY nVidia RTX 3060 LHR 12GB (was a Sapphire AMD RX 480 8GB when initially built)
- SSD: Samsung PM981 1.8TB NVMe M.2 22110 server SSD
- HDD: 6TB disk image on my storage server over iSCSI + iSER. The storage server uses a 16-drive RAID-60 volume formatted as btrfs, shared by 3 computers.
- Wi-Fi + BT: Intel AX200 on a PCIe adapter card
- Ethernet: nVidia Mellanox ConnectX-3 EN 10Gbps Ethernet + RoCE PCIe card + Brocade 10GBASE-SR fiber transceiver
- PSU: EVGA 750W fully modular
- Case: Rosewill 4U rack-mount case
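For context on the storage server: RAID-60 stripes data across several RAID-6 groups, and each group gives up two drives' worth of space to parity. The post does not say how the 16 drives are grouped or how large they are, so this sketch assumes equal-size drives split evenly into groups and uses a hypothetical 4 TB per drive.

```python
# Usable capacity of a RAID-60 array (ignoring filesystem overhead).
# Hypothetical assumptions: equal-size drives split evenly into
# RAID-6 groups; each group loses two drives' worth of space to parity.


def raid60_usable_tb(drives: int, groups: int, drive_tb: float) -> float:
    """Return usable TB for `drives` disks split into `groups` RAID-6 sets."""
    if drives % groups != 0:
        raise ValueError("drives must divide evenly into groups")
    per_group = drives // groups
    if per_group < 4:
        raise ValueError("RAID-6 needs at least 4 drives per group")
    return groups * (per_group - 2) * drive_tb


if __name__ == "__main__":
    # Hypothetical layouts for 16 drives of 4 TB each.
    for groups in (2, 4):
        usable = raid60_usable_tb(16, groups, 4.0)
        print(f"{groups} groups: {usable:.0f} TB usable, "
              f"tolerates up to 2 failures per group")
```

With 16 hypothetical 4 TB drives, two 8-drive groups give 48 TB usable and four 4-drive groups give 32 TB; more groups trade capacity for tolerance of more total drive failures.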