Posts: 17,188

Reputation Activity

-

WereCat reacted to LAwLz in AMD Announces 7900XTX $999, 7900XT $899, Arriving December 13

Yes but that's encoding speed. What I am talking about is encoding quality. AMD's video has historically looked significantly worse than Nvidia's.

AMD might encode more frames per second, but if those frames look bad then it doesn't matter that much.

If we are talking about HEVC, then AMD is waaaay behind Nvidia and Intel. AMD doesn't even support B-frames for HEVC.

Here is an example of what I'm talking about.

AMD's HEVC encoder needs around 8500Kbps of bitrate to look as good as Nvidia's video does at around 4200Kbps.

The video created by AMD's encoder ends up being roughly twice as big as the ones from Nvidia's and Intel's encoders at the same quality.
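As a rough sanity check on the "twice as big" figure, here is a minimal Python sketch. It assumes a constant average bitrate and a hypothetical 10-minute clip; the clip length and the `file_size_mb` helper are illustrative and not from the test above.

```python
def file_size_mb(bitrate_kbps: float, duration_s: float) -> float:
    """Approximate video stream size in megabytes at a constant average bitrate."""
    bits = bitrate_kbps * 1000 * duration_s
    return bits / 8 / 1_000_000


# Bitrates quoted in the post for roughly equal visual quality.
amd_kbps = 8500
nvidia_kbps = 4200

# Hypothetical clip length (assumption, only used to get concrete sizes).
duration_s = 600

amd_mb = file_size_mb(amd_kbps, duration_s)
nvidia_mb = file_size_mb(nvidia_kbps, duration_s)
ratio = amd_mb / nvidia_mb

print(f"AMD:    {amd_mb:.1f} MB")
print(f"Nvidia: {nvidia_mb:.1f} MB")
print(f"AMD file is {ratio:.2f}x the size")
```

The ratio only depends on the two bitrates, so the clip length cancels out. Audio and container overhead are ignored here.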

The example I showed above is kind of a worst case scenario, and VCN is more competitive in some other scenarios (more specifically in scenarios with fewer scene transitions, since I don't think AMD's HEVC encoder supports pre-analysis), but it's pretty much always more or less behind Nvidia and Intel in terms of quality at any given bitrate.

-

WereCat reacted to LAwLz in AMD Announces 7900XTX $999, 7900XT $899, Arriving December 13

Seems promising.

Way too expensive for me to even consider these GPUs, but judging by the (very misleading and cherry picked) benchmarks from AMD I feel like they will probably win at price:performance compared to Nvidia's 40 series. And of course, these are the halo products so their price will be really high regardless. It'll be interesting to see what we get in the 200 to 400 dollar ranges though. I feel like that's what the majority of people actually buy, and that these super expensive cards are mostly marketing material.

I think it's great that they are not only including AV1 encoding, but also FINALLY working with third party developers to integrate their video encoder into programs. It is mind boggling that it took them this long to get it into OBS.

Let's just hope the performance (quality and speed) is good too. When it comes to video encoding AMD has been able to kind of match Nvidia on paper, but in real tests they have always been far behind.

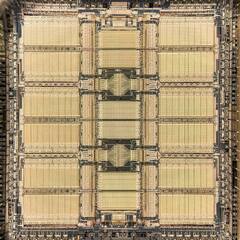

Kind of disappointed that they hyped up the chiplet design and then it turns out they are only using it for the infinity cache. But we are probably a few years away from doing compute chiplets.

I went over the Twitter comments and I am not sure if it's just Twitter being Twitter, but I feel like a lot of fanboys really like that AMD plays to the cheap seats, and that kind of rubs me the wrong way. I just looked at the LTT tweet and the corporate dick sucking going on in the comments is sickening.

-

WereCat reacted to Stahlmann in AMD Announces 7900XTX $999, 7900XT $899, Arriving December 13

Lol can't we just stay away from these stupid abbreviations? At this point it's just too confusing. Just keep it at the vertical pixel count and aspect ratio.

If they had called it a 2160p 32:9 (or at least 2160p super-ultrawide) monitor, EVERYONE would know what they're talking about.

-

WereCat reacted to venomtail in AMD Announces 7900XTX $999, 7900XT $899, Arriving December 13

Naah XFX will probs just announce the XFX RX 7900 XTX Thixx 3 X MercX

-

WereCat reacted to Spotty in AMD Announces 7900XTX $999, 7900XT $899, Arriving December 13

Has XFX announced the XFX RX 7900 XTX XXX card yet?

-

WereCat reacted to Master_Tonberry in MW2 benchmarks

Here are various results from my 3 PCs - all at 1080p EXTREME settings - for context

7950X / RTX 3090

5700G / A770

5900X / RX 6900 XT

-

WereCat reacted to Master_Tonberry in Can my system run COD MWII above 60fps at 1440p?

Your CPU will probably be the biggest bottleneck.

-

WereCat reacted to Mister Woof in AMD Announces 7900XTX $999, 7900XT $899, Arriving December 13

The 7900XT could outperform the 4090 and people would still buy the 4090..

Kind of like how the 6600, 6600XT, 6700XT, 6800XT, AND 6900XT exist and outperform their 3000 series counterparts but people still prefer the Nvidia cards.

-

WereCat reacted to bezza... in will running a live wallpaper with my integrated gpu effect my dedicated gpu while gaming

ok cheers

-

WereCat reacted to Kisai in New EU Law Could Force Apple to Allow Other App Stores, Sideloading, and iMessage Interoperability

Politicians shouldn't create regulations when they don't understand the technology.

Could the EU compel Apple to support RCS in iMessage? Yes. Would it be treated differently from the status quo? Yes.

App stores, or Siri, unlikely.

What really needs to come down the pipe is a regulation compelling an "indefinite perpetual license." E.g., if I buy X app on Windows, I own it, and Microsoft, Adobe, or Autodesk cannot remove it for any reason, be it canceling the service or uninstalling it. If I decide to switch to an iPhone or iPad, and the same software is available, then I should not be forced to buy it a second or third time. If I buy it once, I am entitled to run it on any device that I own, under the same conditions I originally purchased it (e.g., if I bought a PlayStation game 20 years ago, then I'm entitled to any "emulated" version of it on any platform without having to buy it again. If the developer remakes the game, I'm only entitled to the remake if the developer doesn't also have the emulated/native version of the original.)

To take an example, SEGA has released versions of Sonic 1/2/3 for multiple platforms. If I own the original cart, then I should be entitled to the same game inside "Sega Genesis Classics", but also the same game inside "Sonic Origins", because the game is identical. You could make the argument that "Sonic Origins" is a different game, but it isn't.

On the flip side of that, Final Fantasy VII "Remake" is a different game. If I own the PS1 or original 199x PC version, then I should be entitled to any of the "fixed" versions of FF7 released for the PC other than "Remake".

If I own MS Office 2016 or Adobe CS6, then I should be entitled to that version forever. If I "upgrade" to a cloud version, then that perpetual license should be brought forward even to Creative Cloud 2022, because those products have fundamentally not changed. Any new software added to Creative Cloud since I purchased CS6? No.

If I switch between Mac and PC, I should still have that license. If I switch to an iPad, I still have that license.

But under the way things work right now, if I buy a game on iOS and later switch to Android, that entire investment in the Apple ecosystem is discarded. If I buy a game on Steam, I don't automatically get it on iOS, PS5 and Nintendo Switch. That's a problem, and an obstacle to changing platforms, or even using multiple platforms.

-

WereCat reacted to Needfuldoer in Undervolting an old gtx 970 to save money?

IIRC, North American electric code says sustained load cannot exceed 80% of a circuit's rating. So about 1440 watts sustained on a 15 amp 120 V circuit (80% of 1800 watts). Most toaster ovens I've seen are around 800 watts.

When I run a generic office desktop with a Haswell i7, integrated graphics, and a boot SSD through the Kill-a-Watt, it settles into an idle draw of around 35 watts.

My Kaby Lake laptop draws around 4 watts, measured with the same meter.

Is it worth buying a brand new laptop just to save electricity? Probably not. At that 31 watt difference, it would take about 32 hours to save 1 kilowatt-hour. Assuming a $0.25/kWh electric rate, that works out to just over 23,000 hours of run time to save the price of this cheesy Chromebook from Best Buy. You'd break even sometime in June 2025, assuming you left the PC running 24/7 and will do the same with the Chromebook.
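The break-even math can be sketched in a few lines of Python. This is a minimal sketch using only the numbers quoted in the post (35 W and 4 W idle draw, $0.25/kWh, 23,000 hours). The Chromebook's actual price isn't given, so the script only reports how much money that run time would save; the variable and function names are illustrative.

```python
# Idle power draw measured in the post, in watts.
idle_desktop_w = 35
idle_laptop_w = 4

# Electric rate assumed in the post, in USD per kilowatt-hour.
rate_usd_per_kwh = 0.25

# Run time quoted in the post for breaking even.
hours_quoted = 23_000

saved_w = idle_desktop_w - idle_laptop_w   # watts saved while idling
hours_per_kwh = 1000 / saved_w             # hours needed to save 1 kWh


def savings_usd(hours: float) -> float:
    """Money saved after running the lower-power machine for `hours`."""
    return hours * saved_w / 1000 * rate_usd_per_kwh


print(f"Difference: {saved_w} W")
print(f"Hours to save 1 kWh: {hours_per_kwh:.1f}")
print(f"Saved after {hours_quoted} h: ${savings_usd(hours_quoted):.2f}")
print(f"That is about {hours_quoted / 24:.0f} days of 24/7 use")
```

At 23,000 hours the savings come to about $178, which is the laptop price the quoted run time implies, and it takes roughly 958 days of running 24/7 to get there.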

-

WereCat got a reaction from Ottoman420 in is firefox a chrome based browser

because you have Google set as your default search engine

-

WereCat reacted to Needfuldoer in is firefox a chrome based browser

The search bar has absolutely nothing to do with the layout engine.

Google pays the Mozilla Foundation to have their search as the out-of-the-box default in Firefox. You can change it to whatever you want.

https://www.theverge.com/2020/8/15/21370020/mozilla-google-firefox-search-engine-browser

https://support.mozilla.org/en-US/kb/change-your-default-search-settings-firefox

-

WereCat got a reaction from Needfuldoer in is firefox a chrome based browser

because you have Google set as your default search engine

-

WereCat reacted to jaslion in will running a live wallpaper with my integrated gpu effect my dedicated gpu while gaming

Depends on the program.

Wallpaper engine can be set to pause the background when entering a game in full screen.

If you have multiple screens it will of course not do that.

There will be a bit of a loss of performance when active.

-

WereCat got a reaction from jaslion in will running a live wallpaper with my integrated gpu effect my dedicated gpu while gaming

Yes. Not because it's demanding, but because of the Windows Desktop Window Manager... you will absolutely need to play in true full screen mode or you may encounter some weird stuttering issues (unless the animated wallpaper stops being animated when in the background).

GPU usage also depends on clock speed. 15% to 40% GPU usage at 800MHz is way different from the same % usage at max clock speed. I'm assuming you're using Wallpaper Engine, in which case I strongly recommend disabling MSAA or leaving it at the minimum, since it's just eating performance for no good reason. If you need MSAA on a wallpaper, then just get a wallpaper that's better quality to begin with.
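To put rough numbers on the clock speed point, here is a minimal Python sketch. It assumes GPU work scales linearly with clock speed, which is a simplification, and it uses a hypothetical 1900MHz max clock; both the model and that figure are assumptions, not measurements.

```python
def clock_normalized_usage(usage_pct: float, current_mhz: float, max_mhz: float) -> float:
    """Scale a reported usage % to what it would roughly be at max clock.

    Assumes work done is proportional to clock speed (a simplification).
    """
    return usage_pct * current_mhz / max_mhz


# Hypothetical max boost clock; substitute your own card's value.
MAX_MHZ = 1900

for usage in (15, 40):
    low = clock_normalized_usage(usage, 800, MAX_MHZ)
    print(f"{usage}% at 800MHz is roughly {low:.1f}% of the GPU at {MAX_MHZ}MHz")
```

Under these assumptions, 40% usage at 800MHz is closer to 17% of what the card can do at full clock, so the reported percentage on its own overstates the load.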

-

WereCat got a reaction from g85222456 in Don't buy AMD laptop if you still don't know how they name their mobile processor

But according to the legend all of the ones you mentioned are Zen 0 😄

-

WereCat reacted to WoodenMarker in if i purchase two packages of single 1x16 gb ram and put them in the same system will it work? if so will there be a performance decrease?

If the RAM is the same model, it should work just fine.

By default, they should run at standard JEDEC speeds dictated by the motherboard. If one DIMM is slower than the other, you can run the sticks at the slower speed of the two.

-

WereCat reacted to Sleepy_Lazarus in 6900xt vs RTX 3080

Thank you so much to everyone who replied. You gave me the confidence boost I needed to go AMD.

-

WereCat got a reaction from Sleepy_Lazarus in 6900xt vs RTX 3080

Honestly, you will be dealing with driver issues on both sides, so just pick the card that makes the most sense to you. The 6900 XT is definitely a much better card for gaming than the RTX 3080 as long as you don't care about RT and some of the NVIDIA features.

However, I wouldn't get an AMD card if you do any work that's GPU accelerated (except video encoding), since most software just uses CUDA. AMD has been doing some work to improve things, but in this respect they are still quite behind.

I've been on NVIDIA since the 700 series on my main PC and I've never had as many issues as I did in the last year. Forza Horizon is still broken (texture issues), the MWII update makes the game flicker like crazy, we had videos in the browser glitching (I still have this issue unless I force the browser to use OpenGL... since November of last year...), G-Sync was broken in some cases, Dolby Atmos was broken for months, etc...

-

WereCat reacted to RONOTHAN## in 6900xt vs RTX 3080

I've used both pretty recently. The drivers on Nvidia are better, but not significantly better. Both are definitely usable; the AMD drivers just have a few quirks (the in-driver update utility was broken last time I checked, the driver has a tendency to crash if you have an unstable overclock, etc.).

If you don't need any of the Nvidia specific features (NVENC, CUDA, etc.), I'd be using the 6900 XT. In my testing with both cards at 4K (I daily a 4K panel so it was the testing relevant to me at the time), with both cards running a maxed-out overclock (the 6900 XT has a fair bit of overclocking headroom, I got an extra 10-12% performance with an overclock) and about 6 months ago (driver revision affects performance a lot in some games, so I figured it was worth mentioning), the 6900 XT was at worst 5% behind, and at best closer to 10% ahead. That includes ray tracing as well, where for the most part a heavily overclocked 6900 XT was about on par with the 3080. When you step down in resolution, RDNA2 tends to do even better, so if you're playing at 1440p I'd expect those numbers to look a bit better for the AMD card. I haven't really noticed frame times being significantly better or worse on one or the other, so I'd just take that to mean AMD's drivers were a little rough for the first month or two (that does tend to happen with AMD cards).

You can't go wrong with either of these cards, they're both phenomenal GPUs, but the 6900 XT is the one that IMO makes the most sense here. It would be the fastest card at the resolution you're planning to play at, and it's a little cheaper as well. The one I ended up keeping was the AMD card (it's in the system I'm currently typing this up on), so take that for what you will.

-

WereCat got a reaction from soldier_ph in Apple - allegedly - caught nerfing ANC Performance on their Headphones

So if you have an Android phone with Apple headphones you're safe because you can't update them? 😄

-

WereCat got a reaction from Evie Struggles With Life in Reflex ON or ON+Boost?

The boost helps with input latency if you're CPU limited.

-

WereCat got a reaction from Kilrah in Don't buy AMD laptop if you still don't know how they name their mobile processor

But according to the legend all of the ones you mentioned are Zen 0 😄