Explaining "Asynchronous Compute"

One of the more touted features of applications using DirectX 12 and Vulkan is "asynchronous compute." Over the years it has gained some mystique, with people saying one thing or another and creating a stir of conflicting information. In this blog we'll go over what asynchronous compute is, where it came from, and how NVIDIA and AMD handle it.

What exactly is asynchronous compute?

Instead of trying to find an answer from the interwebs, since this term didn't really exist much before 2014 or so, let's look at the term itself, break it apart, and see what we can come up with. The "compute" part is easy: it's some kind of task that's computing something, and the word suggests the task is generic as well. Then comes the "asynchronous" part. This term has a few meanings in computer hardware and software:

- If hardware is synchronous, then its operations are driven by a clock signal. Likewise, asynchronous hardware is not driven by a clock signal.

- In software, if an operation is synchronous, then each step is performed in order. If an operation is asynchronous, then tasks can be completed out of order.

Since the term describes how work is handed to the GPU rather than how the hardware is clocked, the second definition applies. To expand on this, take an application that needs to load data from the hard drive. The application can do one of the following:

- Pause until the hard drive comes back with data, which could take a while.

- Set aside the request as a separate task. When that task needs to read data from the hard drive, it makes the request and goes to sleep. This way other tasks can run, and when the data becomes available, the task can do something with it as soon as possible.

In the first case, the application ensures that the order of tasks is kept, but it can perform poorly because it does nothing else while it waits. In the second case, the application doesn't ensure the order of tasks is kept, but it can do other things rather than sit idle.
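To make the second case concrete, here is a minimal sketch using Python's `asyncio`. The names `load_from_disk` and `other_work`, the file name, and the delay are all made up for illustration; the sleep simply stands in for a slow hard drive.

```python
import asyncio

async def load_from_disk(name, seconds):
    # Hypothetical stand-in for a slow hard drive read.
    await asyncio.sleep(seconds)
    return f"{name} loaded"

async def other_work(log):
    # Work that does not depend on the disk read.
    for step in range(3):
        log.append(f"other work step {step}")
        await asyncio.sleep(0)  # yield so other tasks get a turn

async def main():
    log = []
    # Set the request aside as its own task instead of pausing on it.
    request = asyncio.create_task(load_from_disk("level.dat", 0.05))
    await other_work(log)       # runs while the "disk" is busy
    log.append(await request)   # use the data once it is available
    return log

if __name__ == "__main__":
    for line in asyncio.run(main()):
        print(line)
```

The other work finishes while the read is still pending, which is the whole point: nothing sat idle waiting for the drive.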

Putting it together in the context of graphics cards, it stands to reason that "asynchronous compute" means allowing the graphics card to do compute tasks out of some order so that the GPU can better utilize its resources. So what did the APIs do to enable this?

Multiple command queues to the rescue!

DirectX 12[1] and Vulkan[2] introduced multiple command queues to feed the GPU with work. To understand why this is necessary, let's look at the old way of doing things.

The irony of graphics rendering is that while we consider it a highly parallel operation, determining the final color of any one pixel is in fact a serial operation.[3] Because it's serial, the operations could all be submitted into one queue. However, as graphics got more advanced, more and more tasks were added to this queue. Still, the basic rendering operation was "render this thing from start to finish." Once the GeForce 8 and Radeon HD 2000 series introduced the idea of a GPU that can perform generic "compute" tasks, it was found that some operations could be offloaded to the compute pipeline rather than run along with the graphics pipeline. One such technique that can be offloaded to compute shaders is ambient occlusion.[4] This works because it needs only one type of output from the graphics pipeline: depth buffers.

But this presents a problem. Depth buffers are generated pretty early in the rendering pipeline, which means the GPU could work on ambient occlusion at any time from that point until the final output needs to be composited. But with a single queue, there's no way for the GPU to squeeze this work in when the graphics pipeline has bubbles where resources are not being used.

To illustrate this further, let's have an example:

In this example, there are ten resources available. The first three tasks take up 9 of them. The GPU then looks at task 4, sees it doesn't fit, and stops there. As noted before, actually rendering a pixel is a serial process, and it's not a good idea to jump around in the queue to figure out what will fit. After all, what if task 5 is dependent on task 4? No assumptions can be made. But let's pretend task 5 isn't dependent. How do we get it on the GPU if we can't re-order the queue? We make another queue.

The GPU will fill itself with Tasks 1-1, 1-2, and 1-3 like in the last example, and when it sees Task 1-4 can't fit, it looks in Queue 2 and sees that Task 2-1 can fit. Now the GPU has reached saturation. That said, I'm not sure GPUs really issue work like this in reality. The overall takeaway, however, is that the additional queues allow tasks to be independent of each other and let the GPU schedule its resources accordingly. So how do AMD and NVIDIA handle these queues?
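The two examples above can be sketched as a toy simulation. This is only a model of the idea and not how any real GPU issues work: the function `fill_gpu` and the per-task resource costs are invented here, chosen so the first three tasks use 9 of the 10 resources.

```python
from collections import deque

def fill_gpu(queues, capacity):
    """Issue tasks from the head of each queue, visiting queues in order.

    Every queue is strictly in-order: if its head task does not fit,
    nothing behind it in that queue is considered.
    """
    free = capacity
    issued = []
    for queue in queues:
        pending = deque(queue)
        while pending and pending[0][1] <= free:
            name, cost = pending.popleft()
            free -= cost
            issued.append(name)
    return issued, capacity - free

# Hypothetical (name, resource cost) pairs.
single = [("Task 1", 4), ("Task 2", 3), ("Task 3", 2),
          ("Task 4", 3), ("Task 5", 1)]
queue1 = [("Task 1-1", 4), ("Task 1-2", 3), ("Task 1-3", 2), ("Task 1-4", 3)]
queue2 = [("Task 2-1", 1)]

print(fill_gpu([single], 10))          # one queue: stops at Task 4
print(fill_gpu([queue1, queue2], 10))  # two queues: Task 2-1 fills the gap
```

With one queue, the small Task 5 is stuck behind Task 4 and one resource stays idle. Moving the independent work into its own queue lets it fill that gap without re-ordering anything.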

How AMD Handles Multiple Queues

The earliest design that could take advantage of multiple queues was GCN. With GCN it's fairly straightforward. What feeds the execution units in GCN are command processors. One of them is a graphics command processor, which only handles graphics tasks. The other is the Asynchronous Compute Engine (ACE), which handles scheduling all other tasks. The only difference between the two is which parts of the GPU they can reserve for work.[5] The graphics command processor can reserve all execution units, including the fixed function units meant for graphics. The ACE can only reserve the shader units themselves. A typical application could have three queues: one for graphics work, one for compute work, and one for data requests. The graphics command processor obviously focuses on the graphics queue, while the ACEs focus on the compute and data request queues.

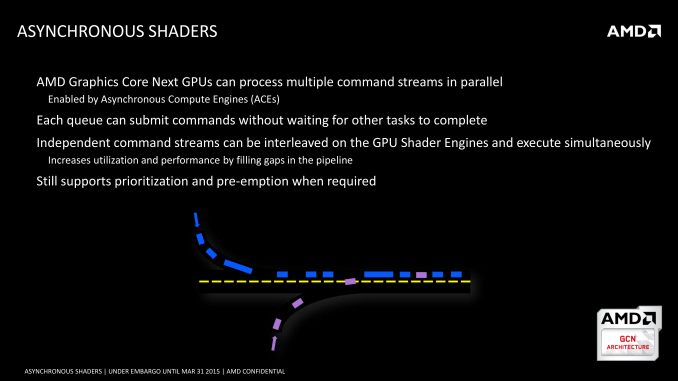

My only issue with how AMD presented their material on asynchronous compute is the diagram they showed to illustrate it:

This works from a timeline point of view, but not from a resource usage point of view. To better understand it in terms of resource usage, GCN and RDNA can be thought of like a CPU with support for multiple threads, although in this case the "threads" are Wavefronts, or groups of threads. Each ACE can be thought of as a thread. Then you can draw it out like this:[6]

How NVIDIA Handles Multiple Queues

The way NVIDIA handles multiple queues isn't as straightforward. To start, let's go back to what could be called the last major revision of GPU design NVIDIA did, which would be the Kepler GPU. There were a few striking things that NVIDIA did compared to the previous GPU, Fermi. These were:

- Doubling the shader units

- Halving the shader clock speed

- Simplifying the scheduler

The third point is where things get interesting. This is the image that NVIDIA provided [7]:

What's probably most interesting is that Kepler uses a "software pre-decode," which oddly sounds like software (the drivers, that is) is now doing the scheduling. But that's not what happened. What happened is that NVIDIA found that operations take a predictable amount of time to complete[7]:

"For Kepler, we realized that since this information is deterministic (the math pipeline latencies are not variable), it is possible for the compiler to determine upfront when instructions will be ready to issue, and provide this information in the instruction itself."

So they removed the part of the hardware that does all of the dependency checking. The scheduler is otherwise the same between Fermi and Kepler[7]:

"Both Kepler and Fermi schedulers contain similar hardware units to handle scheduling functions, including, (a) register scoreboarding for long latency operations (texture and load), (b) inter-warp scheduling decisions (e.g., pick the best warp to go next among eligible candidates), and (c) thread block level scheduling (e.g., the GigaThread engine)"
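The idea in the first quote, that fixed latencies let a compiler work out ahead of time when each instruction can issue, can be sketched with a toy model. This is not NVIDIA's compiler or instruction encoding: the latency values, the register names, and the function `annotate_issue_cycles` are all assumptions made up for illustration.

```python
# Hypothetical fixed latencies in cycles; the source gives no real values.
LATENCY = {"add": 4, "mul": 6}

def annotate_issue_cycles(program):
    """Attach the earliest issue cycle to each instruction.

    program: list of (dest, op, sources) tuples in program order.
    Toy model: in-order issue, at most one instruction per cycle.
    """
    ready_at = {}   # register -> cycle at which its value is available
    cycle = 0       # earliest cycle the next instruction could issue
    annotated = []
    for dest, op, sources in program:
        # An instruction can issue once all of its inputs are ready.
        issue = max([cycle] + [ready_at.get(src, 0) for src in sources])
        annotated.append((dest, op, sources, issue))
        ready_at[dest] = issue + LATENCY[op]
        cycle = issue + 1
    return annotated

program = [
    ("r2", "mul", ("r0", "r1")),
    ("r3", "add", ("r0", "r1")),
    ("r4", "add", ("r2", "r3")),  # must wait for both results above
]

for dest, op, sources, issue in annotate_issue_cycles(program):
    print(f"{op} {dest} <- {sources}: issue at cycle {issue}")
```

Because every latency is known up front, the wait before the third instruction can be computed once at compile time and stored with the instruction, rather than being rediscovered in hardware on every run.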

The so-called GigaThread engine still exists, even in Turing.[8]

However, this doesn't exactly answer the question "does NVIDIA support multiple command queues?", which is the basic requirement for asynchronous compute. So why bring it up? To establish that NVIDIA's GPUs still schedule work in hardware, contrary to the popular belief that they schedule in software and therefore cannot support asynchronous compute. It's just that the work that comes in is streamlined by the drivers to make the scheduler's job easier. Not that it would matter anyway, since the basic requirement to support asynchronous compute is to read from multiple command queues.

So what was the issue? Kepler doesn't actually support simultaneous compute and graphics execution. It can do one or the other, but not both at the same time. It wasn't until 2nd generation Maxwell that NVIDIA added hardware support for running graphics and compute at the same time.[9] So yes, technically Kepler and 1st generation Maxwell do not benefit from multiple command queues, as they cannot run compute and graphics tasks simultaneously.

Some other things of note

The biggest takeaway from introducing multiple command queues, though, is this, from Futuremark:[10]

"Whether work placed in the COMPUTE queue is executed in parallel or in serial is ultimately the decision of the underlying driver. In DirectX 12, by placing items into a different queue the application is simply stating that it allows execution to take place in parallel - it is not a requirement, nor is there a method for making such a demand. This is similar to traditional multi-threaded programming for the CPU - by creating threads we allow and are prepared for execution to happen simultaneously. It is up to the OS to decide how it distributes the work."
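The CPU threading analogy in that quote can be sketched in a few lines. This is only an analogy: the functions `graphics_work` and `compute_work` are made-up placeholders, and the worker count stands in for whatever the driver or OS decides.

```python
from concurrent.futures import ThreadPoolExecutor

def graphics_work():
    # Placeholder for work from the graphics queue.
    return sum(range(1000))

def compute_work():
    # Placeholder for independent work from the compute queue.
    return sum(i * i for i in range(100))

def run(max_workers):
    # Submitting both tasks only states that they MAY overlap.
    # Whether they actually do depends on how many workers exist.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        graphics = pool.submit(graphics_work)
        compute = pool.submit(compute_work)
        return graphics.result(), compute.result()

one_at_a_time = run(1)   # the tasks run one after the other
allowed_both = run(2)    # the tasks are allowed to overlap
print(one_at_a_time == allowed_both)
```

The application code is identical in both runs and so is the result. The only thing that changes is how much overlap the layer underneath chooses to provide.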

But ultimately, because this debate often has AMD proponents claiming NVIDIA can't do asynchronous compute, AMD themselves said this (emphasis added):[11]

"A basic requirement for asynchronous shading is the ability of the GPU to schedule work from multiple queues of different types across the available processing resources."

Thus if one of the huge promoters of asynchronous compute is saying this is the basic requirement, then it doesn't matter what the GPU has as long as it meets this requirement.

Sources, references, and other reading

[2] https://vulkan.lunarg.com/doc/view/1.0.26.0/linux/vkspec.chunked/ch02s02.html

[3] https://www.khronos.org/opengl/wiki/Rendering_Pipeline_Overview

[4] http://developer.download.nvidia.com/assets/gamedev/files/sdk/11/SSAO11.pdf

[5] https://www.anandtech.com/show/4455/amds-graphics-core-next-preview-amd-architects-for-compute/5

[6] https://commons.wikimedia.org/wiki/File:Hyper-threaded_CPU.png

[7] https://www.nvidia.com/content/PDF/product-specifications/GeForce_GTX_680_Whitepaper_FINAL.pdf

[9] https://www.anandtech.com/show/10325/the-nvidia-geforce-gtx-1080-and-1070-founders-edition-review/9

[10] https://benchmarks.ul.com/news/a-closer-look-at-asynchronous-compute-in-3dmark-time-spy
