How does the CPU/GPU bottleneck work?

The title might seem a little strange to anyone who's remotely familiar with performance bottlenecks. But rather than explain things at a high level, where CPU and GPU usage numbers are compared, this post explains them at a lower level: not only what is going on, but why it happens.

How Do Performance Bottlenecks Work?

To understand how performance bottlenecks work, particularly in games, it's important to understand the general flow of a game from a programming standpoint. In its simplest form, the steps to running a game are:

- Process game logic
- Render image

Of course, we can expand this out to be more detailed:

- Process inputs
- Update the game's state (like weather or something)
- Process AI
- Process physics
- Process audio
- Render image

One notable thing is that rendering the image is one of the last steps. Why? Because the output image represents the current state of the game world, and it doesn't make much sense to display an older state. However, before the game reaches the render step, the CPU needs to process the previous steps (though physics may be offloaded to the GPU). If this portion of processing takes too long, it limits the maximum number of frames that can be rendered in a second. For example, if these steps take 2ms to complete, the expected maximum frame rate is 500 FPS. But if these steps take 30ms to complete, the expected maximum frame rate is about 33 FPS.
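The arithmetic behind those numbers is just the reciprocal of the CPU time per frame. Here is a minimal sketch; the function name `max_fps` is made up for illustration and it assumes the CPU work is the only thing limiting the frame rate:

```python
def max_fps(cpu_ms_per_frame: float) -> float:
    """Upper bound on frames per second if every frame needs this much CPU time."""
    # There are 1000 ms in a second, so divide that budget by the cost of one frame.
    return 1000.0 / cpu_ms_per_frame


print(max_fps(2))             # 500.0
print(round(max_fps(30), 1))  # 33.3
```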

The Issue of Game Flow: Game time vs. Real time

If a developer plans on having a game run on multiple systems, there's a fundamental problem: how do you make sure the game appears to run at the same speed no matter the hardware? That is, how do you get game time to match real time regardless of hardware? If you design a game such that each loop step represents 10ms of real time, then you need to make sure the hardware runs the loop in 10ms or less, otherwise game time will fall behind real time. Likewise, if the hardware can process the loop in less than 10ms, you need to make sure the processor doesn't immediately start work on the next state of the game world. Otherwise game time will run faster than real time.

To do that, developers find ways of syncing the game so it matches up with real time.

Unrestricted Processing (i.e., no syncing)

This runs the game without pausing to sync up to real time. While it's simple, this means if the game isn't running on a system it was designed for, game time will never match up to real time. Early DOS games used this method.

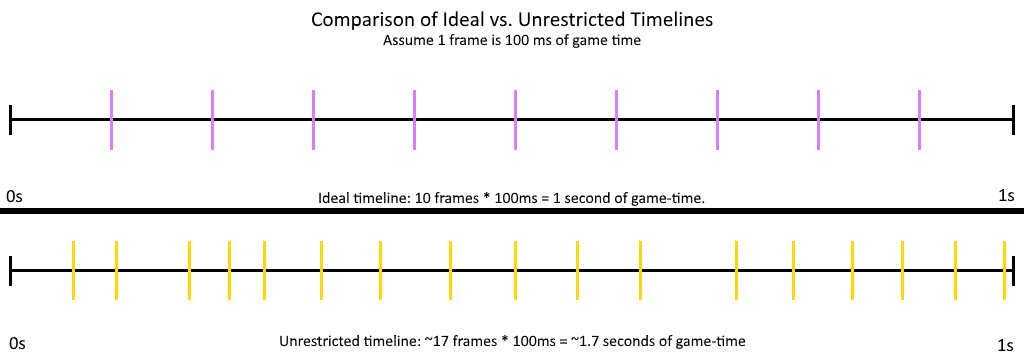

This chart shows a comparison against an "ideal timeline" where the designer wanted 1 frame to be 100ms in real time. The next timeline is when the game is run on a faster system, so it completes each interval sooner. This results in more frames being pushed out, and now game time is faster than real time. That is, in the unrestricted timeline, 1.7 seconds of game time have passed, but they are squeezed into 1 second of real time. The result is that the game runs faster than real time.
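To put numbers on that, here is a small sketch of an unrestricted loop. It is not taken from any real game: the function name and the 58ms tick cost are assumptions, chosen so that 17 ticks fit into one second like in the chart.

```python
def game_time_ms(real_ms: int, tick_cost_ms: int, designed_tick_ms: int = 100) -> int:
    """Game time that passes when the loop never waits for real time."""
    # With no syncing, the next tick starts as soon as the previous one finishes.
    ticks = real_ms // tick_cost_ms
    # Every tick still advances the game world by the designed amount.
    return ticks * designed_tick_ms


print(game_time_ms(1000, 100))  # 1000: the system the game was designed for
print(game_time_ms(1000, 58))   # 1700: a faster system, game time outruns real time
```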

Fixed Interval Processing, With an Image Rendered Every Tick

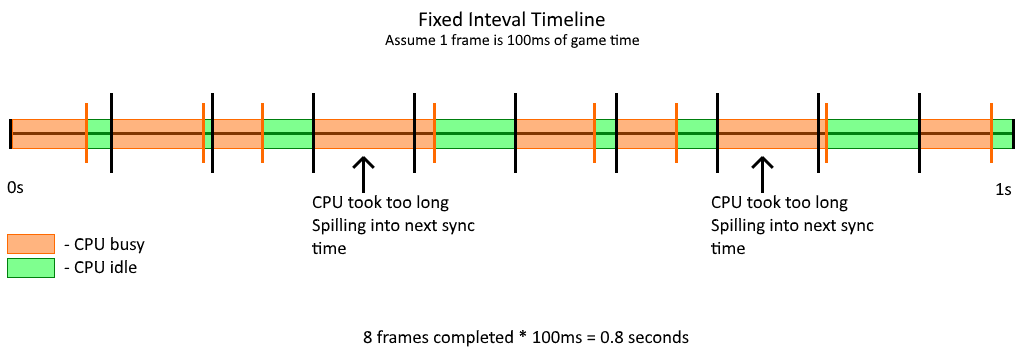

The loop is run at a fixed interval. If the CPU is done early, the CPU idles for the rest of the interval. However, if the CPU takes too long, processing spills into the next interval to be completed, and then it idles. Note that the image is not rendered until the CPU is done with its work. If the CPU is late, the GPU simply displays the last frame it rendered.

In this chart, we have a scenario where the CPU took too long to process game logic, so the work spills into the next interval. If a frame is meant to represent 100ms of game time, this scenario completed 8 frames, resulting in a game time of 0.8s over a real-time period of 1s. The result is that the game runs slower than real time. Note: this is not how V-Sync works. V-Sync is a forced synchronization on the GPU. That is, the GPU will render the frame anyway, but will wait until the display is done showing the previous frame before presenting it.
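The same scenario can be sketched as a quick simulation. The per-tick CPU costs below are invented (two ticks run long at 150ms), and `ticks_completed` is a hypothetical helper, not code from any engine:

```python
import math


def ticks_completed(costs_ms, interval_ms=100, real_ms=1000):
    """Count the game ticks that finish within real_ms of real time."""
    elapsed = 0
    done = 0
    for cost in costs_ms:
        # A tick always occupies whole intervals: if the CPU finishes early it
        # idles until the boundary, and if it is late it spills into the next one.
        slots = max(1, math.ceil(cost / interval_ms))
        if elapsed + slots * interval_ms > real_ms:
            break
        elapsed += slots * interval_ms
        done += 1
    return done


costs = [60, 60, 150, 60, 60, 60, 150, 60, 60, 60]
done = ticks_completed(costs)
print(done, done * 100)  # 8 ticks, so 800 ms of game time in 1000 ms of real time
```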

Chances are that if a game for an 8-bit or 16-bit system isn't using unrestricted processing, it's using this method. A convenient source for the time interval is the screen's refresh rate. Modern game consoles and other fixed-configuration hardware may also still use this because it's easy to implement. If such a game gets ported to the PC and its time syncing isn't updated, applying a 60FPS patch can cause issues, since the game's speed is tied to how often a frame is rendered.

Here's a video showing how the SNES used this method of syncing:

Variable Intervals

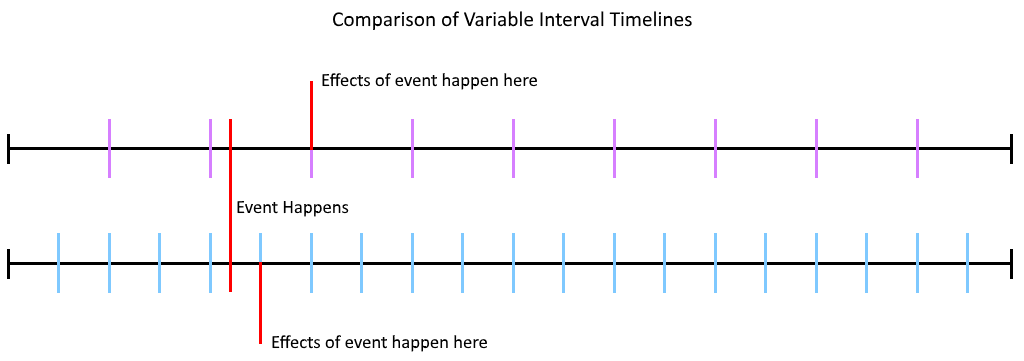

Instead of demanding a fixed interval, why not have an interval that's determined by the performance of the CPU? While this can guarantee that game time is the same as real time, it presents a problem: now the game's physics isn't consistent. The thing with physics is that a lot of formulas examine a change over time. For example, velocity is the change in position over time. This means that if you have two different intervals at which things are updated, you can end up with two different outcomes.

Say, for example, we have an object traveling at 10m/s. If we have two intervals, one 100ms (or 1m per tick) and the other 50ms (or 0.5m per tick), the object will be in the same place at any given time as long as nothing happens to it. But let's say the object is about to hit a wall, and the collision detection treats it as a collision if the object is touching or "inside" the wall at the next interval. Depending on how far the object is from the wall and how thick the wall is, the object in the longer-interval game may appear to travel right through the wall, because it is already past the wall when the next interval comes around.
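Here is a rough sketch of that wall example. The wall position (1.2m to 1.7m from the start) and the function name are made up for illustration, and the collision check is the naive "is it inside the wall right now" test described above:

```python
def first_collision(dt, velocity=10.0, wall_start=1.2, wall_end=1.7, max_time=1.0):
    """Return the position where a collision is detected, or None if it is missed."""
    position = 0.0
    steps = round(max_time / dt)
    for _ in range(steps):
        position += velocity * dt  # move one tick's worth of distance
        # Naive check: only look at where the object is at the end of the tick.
        if wall_start <= position <= wall_end:
            return position
    return None


print(first_collision(0.05))  # 1.5: the 50 ms game lands inside the wall
print(first_collision(0.1))   # None: the 100 ms game jumps from 1.0 m to 2.0 m
```

Real engines have ways around this, but the point here is only that the outcome depends on the interval.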

Another issue is that because physics is calculated using floating-point numbers, their inherent rounding errors compound with more calculations. This means the shorter-interval game may arrive at a different number because it has done more calculations, each of which adds a little more error.
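A tiny sketch of the rounding issue, assuming an object moving at 1m/s for one second (the `integrate` helper is hypothetical). Both runs should end at exactly 1.0m on paper, but each addition rounds a little, so neither is guaranteed to land there, and the two runs are not guaranteed to agree with each other:

```python
def integrate(dt, steps, velocity=1.0):
    """Add up position one tick at a time, the way a game loop would."""
    position = 0.0
    for _ in range(steps):
        position += velocity * dt
    return position


coarse = integrate(0.1, 10)   # ten 100 ms ticks
fine = integrate(0.05, 20)    # twenty 50 ms ticks
print(coarse)                 # 0.9999999999999999, not 1.0
print(fine, coarse == fine)
```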

Essentially, the physics and interaction of the game are no longer predictable. This has obvious issues in multiplayer, though it can also change how single player game mechanics work.

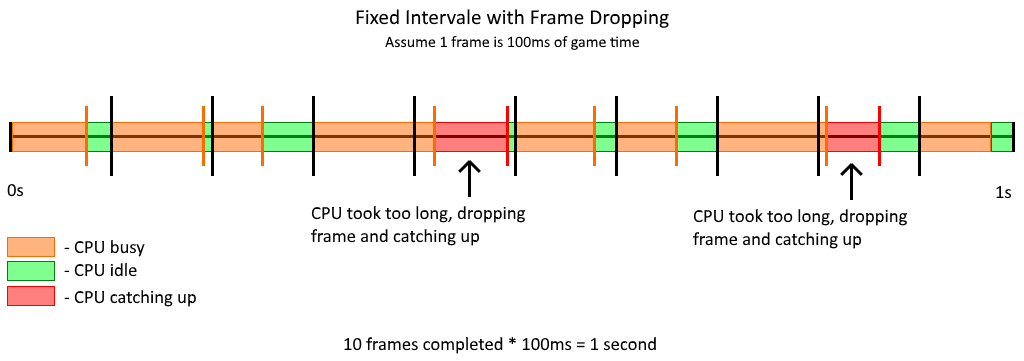

Fixed Intervals, but Drop Frames if the CPU Needs to Catch Up

The game is run at a fixed interval, but instead of requiring that the GPU render an image after every game tick, the CPU skips telling the GPU to render a frame when it is running late and uses the freed-up time to catch up. Once the CPU has caught up, the GPU is allowed to render the next image. The idea is that the load varies during the game, so the CPU should be able to catch up at some point rather than being stuck in the "CPU needs to catch up" phase all the time. This allows systems of varying performance to run the game while keeping up with real time.

Many modern game engines use this method since it allows for stability and determinism while still allowing flexibility in rendering times. For the most part, the interval comes from a timer so that when it expires, servicing it becomes a high priority.
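As a rough sketch of the idea (a simulation with made-up tick costs, not how any particular engine implements it), the loop below renders a frame only when a tick finishes by the end of its interval, and skips the render when it doesn't:

```python
def rendered_ticks(tick_costs_ms, interval_ms=100):
    """Return the indices of the game ticks that also get a rendered frame."""
    clock = 0  # simulated real time in ms
    rendered = []
    for tick, cost in enumerate(tick_costs_ms):
        deadline = (tick + 1) * interval_ms
        # If the CPU is early it idles until this tick's interval begins;
        # if it is behind, it starts right away to catch up.
        start = max(clock, tick * interval_ms)
        clock = start + cost
        if clock <= deadline:
            rendered.append(tick)  # on time: ask the GPU for a frame
        # Otherwise the tick was late: skip the render and move on.
    return rendered


print(rendered_ticks([50, 150, 30, 50]))  # [0, 2, 3]
```

Tick 1 runs long, so no frame is requested for it. Tick 2 is cheap enough that the CPU is back on schedule, and rendering resumes.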

Looking at a few scenarios: What happens when the GPU can complete its task faster or slower relative to when the CPU does?

The following will be looking at a few scenarios on when game-time ticks are processed and when frames are rendered. One thing to keep in mind is that a rendering queue is used so that if the CPU does take a while, the GPU can at least render something until the CPU is less busy. You might know this option as "render ahead" or similar. With a longer render queue, rendering can be smooth at the expense of latency. With a shorter queue, latency is much shorter but the game may stutter if the CPU can't keep up.

With that in mind, the charts used have the following characteristics:

- Processing a game tick or rendering a frame is represented by a color. The colors are supposed to match up with each other. If the CPU or GPU is not doing anything, this time is represented by a gray area.
- Assume the render queue can hold 3 commands.
- For the queue representation, frames enter from the right and go to the left. They exit to the left as well.
- The render queue will be shown at each interval, rather than when the CPU finishes up processing a game tick.
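The render queue described above can be sketched as a small bounded queue. The `RenderQueue` class is hypothetical, and dropping the oldest waiting frame when the queue is full is an assumption on my part; a real driver or engine may choose a different frame to drop.

```python
from collections import deque


class RenderQueue:
    """Bounded queue of frames waiting for the GPU (capacity 3, as in the charts)."""

    def __init__(self, capacity=3):
        self.capacity = capacity
        self.frames = deque()
        self.dropped = []

    def submit(self, frame):
        # Assumption: when the queue is full, the oldest waiting frame is dropped.
        if len(self.frames) == self.capacity:
            self.dropped.append(self.frames.popleft())
        self.frames.append(frame)  # new frames enter on the right

    def next_frame(self):
        # The GPU takes frames from the left; None means nothing is waiting.
        return self.frames.popleft() if self.frames else None


queue = RenderQueue()
for color in ["red", "green", "blue", "purple"]:
    queue.submit(color)
print(queue.dropped)       # ['red']
print(queue.next_frame())  # green
```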

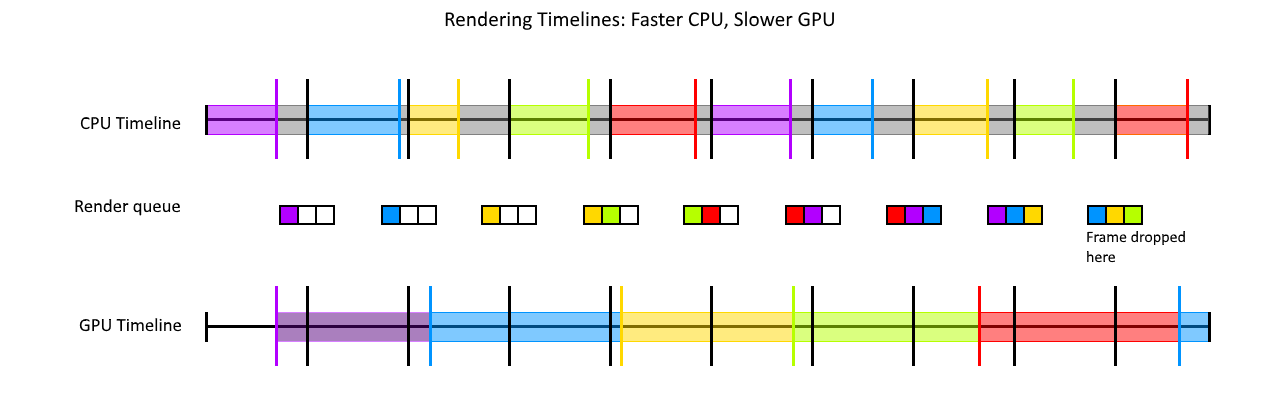

CPU can complete its work within an interval, GPU is slower

This is a case where the CPU can complete its work without spilling over into the next interval, but the GPU takes much longer to generate a frame.

Note that 10 game ticks were generated, but only 5 frames were rendered. The queue also filled up toward the end, and since the GPU couldn't get to the next frame, the queue had to drop one. In this case, the second purple frame was queued up but had to be dropped at the end since the GPU could not get to it fast enough.

This is also why the GPU cannot bottleneck the CPU. The CPU still processes the subsequent game ticks without waiting for the GPU to be done. However, if the game uses fixed interval processing with an image rendered every tick, then the GPU can bottleneck the CPU. But since most PC games don’t use that method, we can assume it’s not an issue.

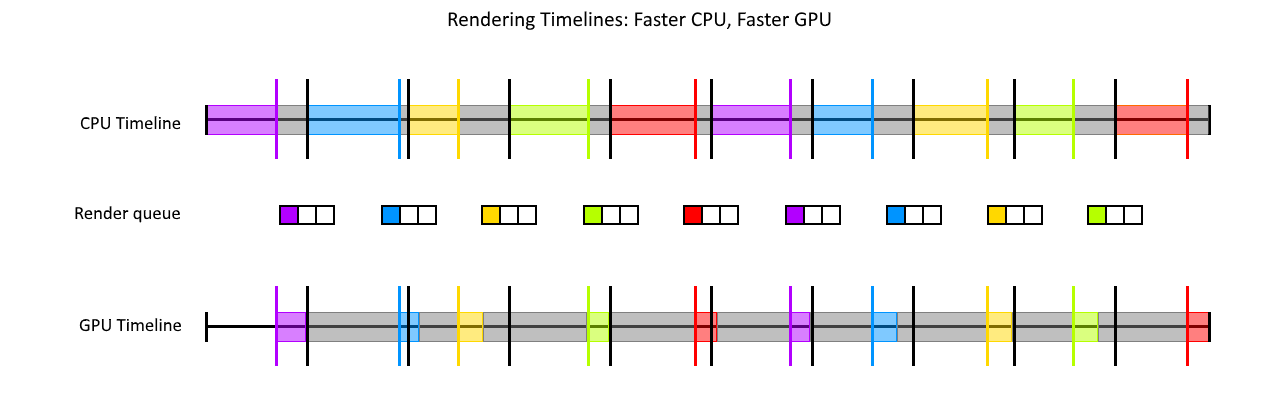

CPU can complete its work within an interval, GPU is faster

In this case, the GPU can easily render a frame, so the GPU is ready to render a new frame as soon as the current game tick is processed.

(Note: technically the queue would be empty the entire time)

This is not a CPU bottleneck condition, however, as the game is designed around a fixed interval. Some game developers may design their game loop so it runs at a much faster interval than 60Hz so that high-end GPUs don't have idle time like this. But if the GPU can keep up and the interval runs at a lower frequency, then performance can be either smooth or stuttering, depending on the CPU processing times.

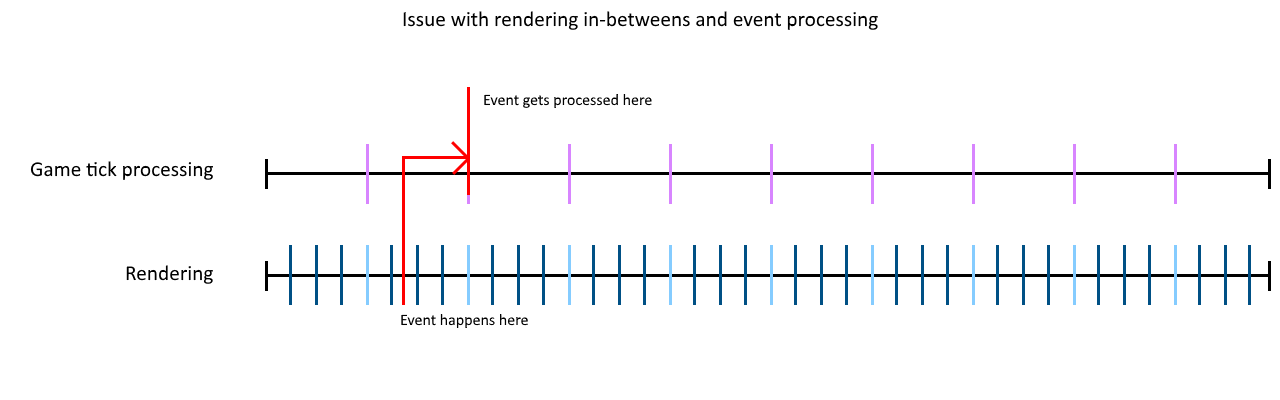

Some games may allow the CPU to generate GPU commands to render “in-between” frames and use the time between the render command and the last game tick to represent how much to move objects. Note that these extra frames are superfluous, meaning your actions during them have no impact on the state of the game itself until the next game-tick.
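A minimal sketch of how such an in-between frame might place an object, assuming the game knows the object's position and velocity at the last tick (the function and parameter names are made up):

```python
def render_position(tick_position, velocity, ms_since_tick):
    """Where to draw an object for a frame rendered between two game ticks."""
    # Move the object by however much time has passed since the last tick.
    # This only affects what is drawn; the game state itself does not change.
    return tick_position + velocity * (ms_since_tick / 1000.0)


# Object was at 5.0 m at the last tick, moving at 10 m/s, drawn 50 ms later.
print(render_position(5.0, 10.0, 50))  # 5.5
```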

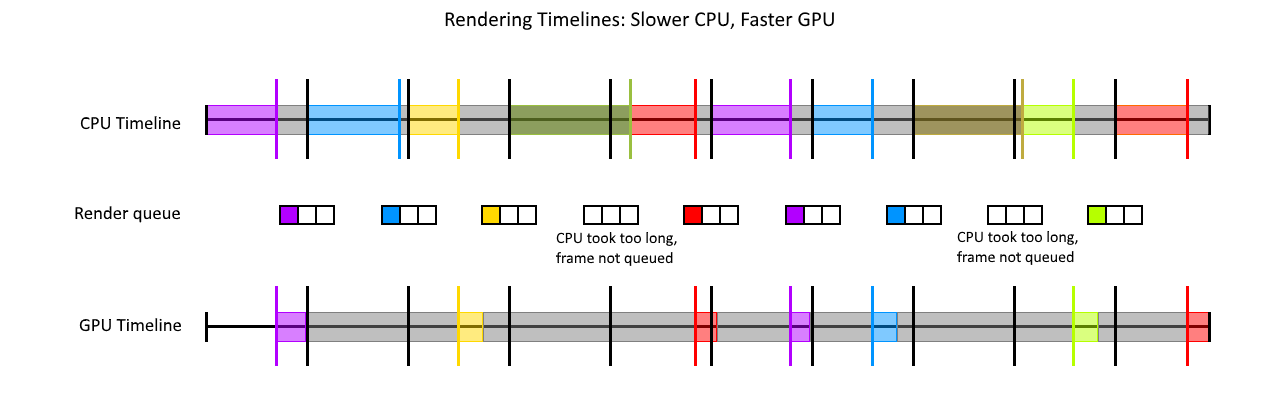

CPU cannot complete its work within an interval, GPU is faster

In this scenario, the CPU has issues keeping up with processing the game logic, so it drops the frame in favor of catching up. This has the effect of not queuing up a frame in the first place and the GPU is stuck repeating the last frame it rendered. However, when the CPU catches up and queues up a frame, it's representing the last game tick.

(Note: technically the queue would be empty the entire time)

In this case, because the first green frame took too long, it doesn't get queued and so the GPU continues to show the yellow frame. The CPU catches up on the first red frame which the GPU will render. A similar thing happens on the game tick of the second yellow frame.

Of all the scenarios, this one is probably the least favorable. Notice how frames can be clumped together with longer pauses between them. This causes the feeling of the game stuttering.

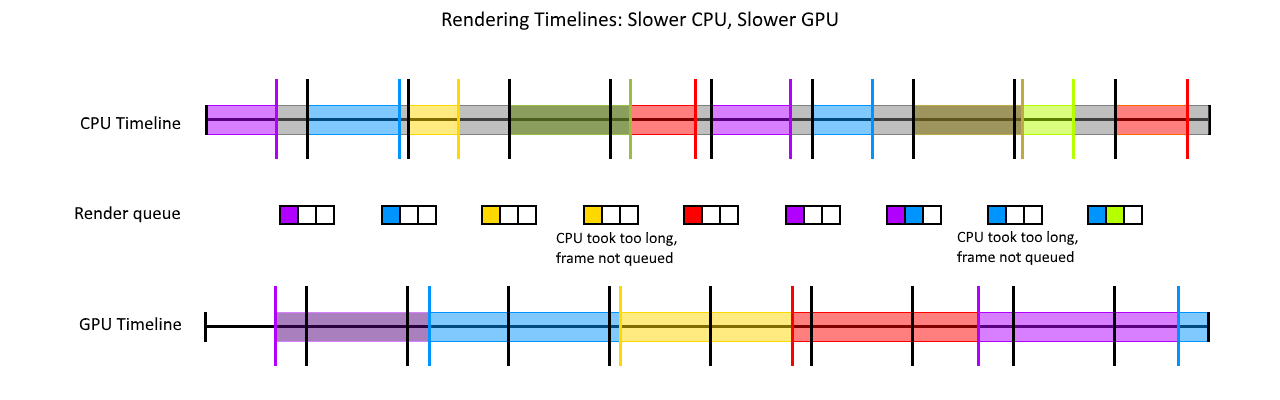

CPU cannot complete its work within an interval, GPU is slower

This is a case where the CPU has trouble completing every tick within an interval and the GPU has trouble rendering a frame within an interval as well.

This scenario, depending on how fast the frame rate actually is, may not be as bad as it looks, as it spreads the frames out over time.

Further Reading

For further reading, I picked up most of this information from:

https://bell0bytes.eu/the-game-loop/

https://gameprogrammingpatterns.com/game-loop.html
